End-to-end guide (2025): design, implement, optimize, secure, and operate a modern data warehouse on Microsoft Fabric so that teams can deliver reliable analytics.

Context: Why Data Warehousing in Fabric matters for modern analytics

Microsoft Fabric unifies Lakehouse storage, SQL-serving endpoints, notebooks, and pipelines in a single platform, which simplifies operations and shortens time-to-insight. Because tables are stored in the Delta Lake format, warehouses built on Fabric get ACID transactions, time travel, and efficient upserts, all of which matter for reliable analytics.

Summary: choose Data Warehousing in Fabric to reduce tool sprawl, lowering operational overhead while increasing analytic agility.

Stack: Architecture and components

When building a data warehouse in Fabric, include these core components, which work together as an end-to-end system:

- Lakehouse storage for raw, refined, and curated Delta tables, acting as the canonical data store.

- SQL Warehouse endpoints for low-latency, high-concurrency analytics through a T-SQL compatible surface.

- Notebooks for ELT/ETL and heavy transforms that execute on Spark at scale.

- Data Pipelines to orchestrate and schedule notebook and dataflow activities reliably.

- BI semantic layers such as Power BI semantic models (formerly called datasets) that enable governed self-service reporting.

Additionally, Fabric integrates governance, security, and monitoring so that the warehouse can meet enterprise requirements for compliance and performance.

Plan: Designing layers, schema, and patterns

Design your Data Warehouse around functional layers. For example, the layered approach below supports incremental processing and clear separation of concerns:

- Raw layer — preserve original files immutably in Lakehouse raw zones for auditability.

- Staging / Bronze — perform lightweight cleanses and schema harmonization.

- Refined / Silver — normalize, dedupe, and apply business rules.

- Curated / Warehouse — denormalized fact and dimension tables tuned for SQL queries and reporting.

Modeling guidance

Prefer star schemas for reporting: give dimension tables surrogate keys where natural keys are unstable or composite, and have fact tables reference those keys. Choose partitioning and Z-ordering strategies that align with frequent filter predicates to reduce I/O and latency.
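To make the surrogate-key guidance concrete, here is a minimal Python sketch of the idea. The function names and the sample customer ids are hypothetical, and in Fabric this logic would normally run as a Spark or SQL transform rather than in plain Python; the point is only that existing members keep their keys across reloads.

```python
from typing import Dict, Iterable, List, Tuple


def assign_surrogate_keys(
    natural_keys: Iterable[str],
    existing: Dict[str, int],
) -> Dict[str, int]:
    """Return a natural-key -> surrogate-key map, extending `existing`.

    Keys already present keep their surrogate value, so re-running the
    load never renumbers a dimension member.
    """
    mapping = dict(existing)
    next_key = max(mapping.values(), default=0) + 1
    for natural_key in natural_keys:
        if natural_key not in mapping:
            mapping[natural_key] = next_key
            next_key += 1
    return mapping


def resolve_fact_rows(
    fact_rows: List[Tuple[str, float]],
    customer_keys: Dict[str, int],
) -> List[Tuple[int, float]]:
    """Replace the natural customer id on each fact row with its surrogate key."""
    return [(customer_keys[customer_id], amount) for customer_id, amount in fact_rows]


# Hypothetical sample data: one customer already known, two new ones.
customer_keys = assign_surrogate_keys(["C-100", "C-200", "C-300"], {"C-100": 1})
facts = resolve_fact_rows([("C-200", 19.99), ("C-100", 5.00)], customer_keys)
```

Keeping the mapping stable is what lets fact rows loaded on different days join to the same dimension member.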

Non-functional priorities

Design for predictable SLAs, cost control, reproducibility, and strong auditability from the start.

Build: Implementation patterns for Data Warehousing in Fabric with SQL and Delta

Implement transforms with notebooks for scale, but expose results via SQL Warehouse so analysts can run fast queries. Below are practical SQL and merge examples that illustrate recommended patterns. They use Spark SQL with Delta syntax, so they are meant to run in a notebook rather than against the T-SQL endpoint.

Create a curated fact table

CREATE TABLE curated.sales_fact USING DELTA PARTITIONED BY (sale_date) AS SELECT order_id, customer_id, product_id, CAST(sale_date AS DATE) AS sale_date, CAST(quantity AS INT) AS quantity, CAST(amount AS DECIMAL(18,2)) AS amount FROM silver.sales_raw;

Delta merge for incremental loads

MERGE INTO curated.sales_fact t USING updates u ON t.order_id = u.order_id WHEN MATCHED THEN UPDATE SET * WHEN NOT MATCHED THEN INSERT *;

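Because MERGE matches on the business key, re-running the same batch leaves the table unchanged, which makes upserts idempotent, provided the source contains at most one row per key. Schedule these operations with Data Pipelines for regular refreshes.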
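The idempotency property is easy to check on a toy model. The sketch below is plain Python, not Delta: `merge_by_key` is a hypothetical helper that imitates the matched-update / not-matched-insert logic on an in-memory dictionary, and the sample orders are made up.

```python
from typing import Dict, List

Row = Dict[str, object]


def merge_by_key(
    target: Dict[object, Row],
    updates: List[Row],
    key: str = "order_id",
) -> Dict[object, Row]:
    """Upsert `updates` into a copy of `target`, matching rows on `key`.

    Imitates MERGE ... WHEN MATCHED THEN UPDATE / WHEN NOT MATCHED THEN INSERT.
    Assumes at most one update row per key; here a duplicate would silently
    let the last row win, which is a simplification of this sketch.
    """
    merged = dict(target)
    for row in updates:
        merged[row[key]] = dict(row)  # update if the key exists, insert otherwise
    return merged


# Hypothetical data: one existing order is corrected, one new order arrives.
target = {"A1": {"order_id": "A1", "amount": 10.0}}
batch = [
    {"order_id": "A1", "amount": 12.5},
    {"order_id": "B2", "amount": 7.0},
]
once = merge_by_key(target, batch)
twice = merge_by_key(once, batch)  # replaying the batch changes nothing
```

Applying the batch a second time yields the same table state as applying it once, which is the behavior a retried pipeline run relies on.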

For orchestrated patterns that combine notebooks and pipelines, consult the Data Pipelines guide and Transform Notebooks tutorial linked in references below.

Tune: Performance tuning for cost control

Warehouse performance depends on physical design and platform features. Apply the following optimizations to improve query speed and lower cost:

- Partition wisely: partition curated tables by low-cardinality columns that appear in most filters, such as date, so queries scan fewer files. Avoid high-cardinality partition keys, which produce many small files.

- Optimize and Z-Order: compact small files and use Z-ordering on commonly filtered columns to accelerate predicate queries.

- Materialized aggregates: precompute heavy aggregations or create summary tables for frequent dashboard queries.

- Warehouse sizing and result caching: select Warehouse size for concurrency needs and leverage result caching where applicable.

- Monitor and tune: use Fabric diagnostic tools to find expensive queries, then refactor the queries or adjust the table layout accordingly.

-- Example: compact a Delta table and Z-order it by a commonly filtered column
OPTIMIZE curated.sales_fact ZORDER BY (customer_id);

Finally, schedule compaction during off-peak windows to avoid contention with BI queries and to keep compute costs predictable.
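The effect of date partitioning can be illustrated with a small Python sketch. It is a toy model of partition pruning, not how the Fabric engine is implemented: `prune_partitions` and the file names are hypothetical, and the layout simply assumes one group of files per `sale_date`.

```python
from datetime import date
from typing import Dict, List


def prune_partitions(
    files_by_partition: Dict[date, List[str]],
    start: date,
    end: date,
) -> List[str]:
    """Return only the files whose partition date falls in [start, end]."""
    selected: List[str] = []
    for partition_date in sorted(files_by_partition):
        if start <= partition_date <= end:
            selected.extend(files_by_partition[partition_date])
    return selected


# Hypothetical layout: files grouped by sale_date; names are made up.
layout = {
    date(2025, 1, 1): ["part-000.parquet", "part-001.parquet"],
    date(2025, 1, 2): ["part-002.parquet"],
    date(2025, 1, 3): ["part-003.parquet", "part-004.parquet"],
}
scanned = prune_partitions(layout, date(2025, 1, 2), date(2025, 1, 2))
total_files = sum(len(files) for files in layout.values())
```

A query filtered to a single day touches one of the five files in this layout; a filter on a column that is not the partition key would have to consider all of them.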

Run: Operationalizing Data Warehousing in Fabric: orchestration and CI/CD

Operational excellence means reliable schedules, tests, and observability. For example, implement the following practices:

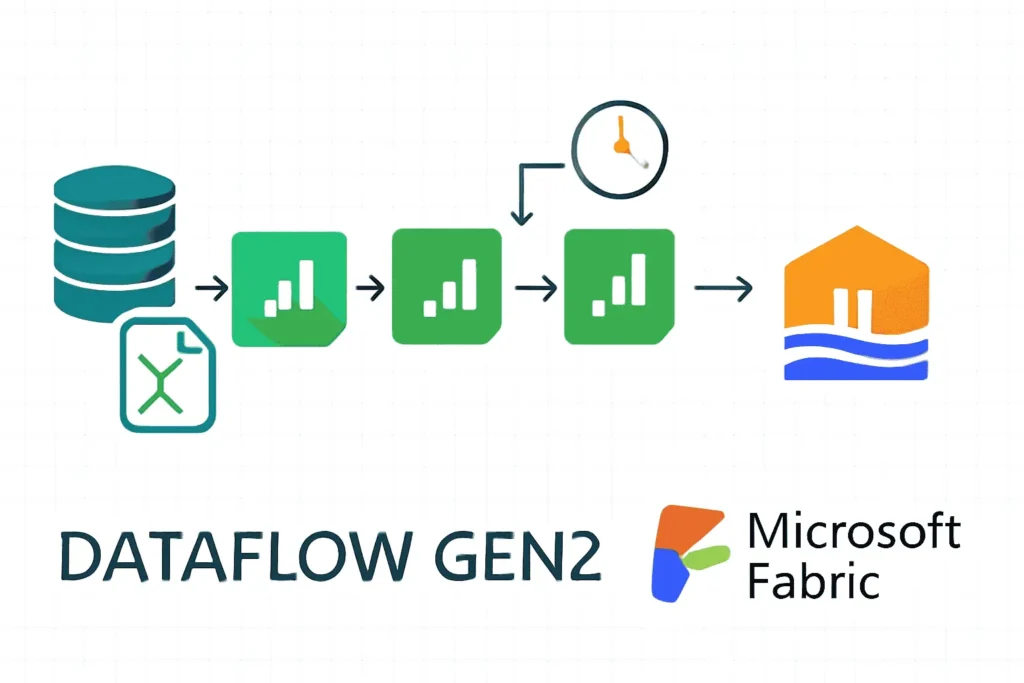

- Pipeline-driven ELT — orchestrate notebooks and dataflows with retry and alerting policies.

- Parameterization — pass run_date and environment as parameters so notebooks are reusable across dev/stage/prod.

- Testing and CI — run integration tests on representative sample datasets in pull requests to prevent regressions.

- Deployment gating — apply approvals and automated checks before promoting transformations to production.

In addition, capture run metadata, row counts, and table versions for each run to support incident triage and SLA reporting.
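As a rough illustration of parameterization and run metadata together, the Python sketch below wraps a transform so that every run yields a loggable record. `RunRecord`, `run_transform`, and `drop_null_amounts` are hypothetical names, the environment list is taken from the dev/stage/prod split mentioned above, and the table version is left out because it would come from the Delta table history rather than from this code.

```python
from dataclasses import asdict, dataclass
from datetime import date
from typing import Callable, Dict, List

Rows = List[Dict[str, object]]


@dataclass(frozen=True)
class RunRecord:
    run_date: str
    environment: str
    rows_in: int
    rows_out: int
    status: str


def run_transform(
    run_date: date,
    environment: str,
    rows: Rows,
    transform: Callable[[Rows], Rows],
) -> RunRecord:
    """Run `transform` and return the metadata a pipeline could log for the run."""
    if environment not in {"dev", "stage", "prod"}:
        raise ValueError(f"unknown environment: {environment}")
    try:
        result = transform(rows)
        status, rows_out = "succeeded", len(result)
    except Exception:
        # Sketch only: a real pipeline would also record the error details.
        status, rows_out = "failed", 0
    return RunRecord(run_date.isoformat(), environment, len(rows), rows_out, status)


def drop_null_amounts(rows: Rows) -> Rows:
    """Example transform: discard rows that have no amount."""
    return [row for row in rows if row.get("amount") is not None]


sample = [{"order_id": "A1", "amount": 10.0}, {"order_id": "B2", "amount": None}]
record = run_transform(date(2025, 1, 2), "dev", sample, drop_null_amounts)
logged = asdict(record)  # plain dict, ready to append to a run-log table
```

Because `run_date` and `environment` are arguments rather than constants, the same transform can be replayed for a past date or promoted between environments without code changes.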

Trust: Governance and security for Data Warehousing in Fabric

Security and governance are integral to any implementation. Therefore, adopt the following controls:

- Microsoft Entra RBAC — manage identities and workspace permissions with least privilege.

- Column-level masking — mask PII or expose masked views to downstream consumers.

- Lineage and catalog — register datasets, notebooks, and pipelines so you can trace data provenance and perform impact analysis.

- Retention and time-travel — use Delta time-travel and retention policies to meet recovery and compliance needs.

Moreover, restrict write access to curated tables and require PR-based reviews for schema changes to avoid accidental breaking changes.
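The masked-view idea can be sketched in a few lines of Python. This is only an illustration of the behavior: `mask_email` and `masked_view` are hypothetical helpers, the masking rule is an arbitrary choice, and in a real deployment the control would be enforced in the warehouse or through governed views rather than in application code.

```python
from typing import Callable, Dict, List

Row = Dict[str, object]


def mask_email(email: str) -> str:
    """Keep the first character and the domain; hide the rest of the local part."""
    local, sep, domain = email.partition("@")
    if not sep or not local:
        return "***"  # not a recognisable address: mask everything
    return f"{local[0]}***@{domain}"


def masked_view(
    rows: List[Row],
    maskers: Dict[str, Callable[[str], str]],
    can_see_pii: bool,
) -> List[Row]:
    """Return copies of `rows`, masking the columns in `maskers` unless permitted."""
    if can_see_pii:
        return [dict(row) for row in rows]
    return [
        {col: maskers[col](val) if col in maskers else val for col, val in row.items()}
        for row in rows
    ]


# Hypothetical customer row; the address is made up.
customers = [{"customer_id": 1, "email": "ana@example.com"}]
analyst_rows = masked_view(customers, {"email": mask_email}, can_see_pii=False)
steward_rows = masked_view(customers, {"email": mask_email}, can_see_pii=True)
```

Downstream consumers without PII access still get a usable column for grouping by domain, while the identifying part of the value is hidden.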

Apply: Use cases and migration recipes for Data Warehousing in Fabric

Below are actionable use cases that show how organizations apply Data Warehousing in Fabric to real problems:

Modern BI warehouse for retail using Data Warehousing in Fabric

For example, ingest POS and ecommerce events into the raw Lakehouse zone, run nightly Spark notebook transforms to produce curated fact tables, and expose them through the Warehouse SQL endpoint for Power BI dashboards. Business users then receive consistent metrics with predictable performance.

Lift-and-shift migration to Data Warehousing in Fabric

- Export schema and snapshots from the legacy warehouse.

- Ingest historical data into the Lakehouse raw zone as Parquet files.

- Recreate dimension and fact tables with Delta, testing row counts and checksums.

- Cut over BI models to the Fabric Warehouse endpoint after validation.
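The row-count and checksum validation in the steps above can be sketched as follows. This is an assumption-laden Python illustration: `table_fingerprint` is a hypothetical helper, rows are assumed to be already normalized to comparable values (for example, decimals rendered as strings), and a production check would run inside the engine on each side rather than pull rows into Python.

```python
import hashlib
from typing import Iterable, Sequence, Tuple


def table_fingerprint(rows: Iterable[Sequence[object]]) -> Tuple[int, str]:
    """Return (row_count, checksum) for a table, independent of row order.

    Each row is hashed with SHA-256 and the digests are summed modulo 2**256,
    so reordering rows does not change the result but altering, dropping,
    or duplicating a row does.
    """
    count = 0
    combined = 0
    for row in rows:
        encoded = "\x1f".join(repr(value) for value in row).encode("utf-8")
        digest = hashlib.sha256(encoded).digest()
        combined = (combined + int.from_bytes(digest, "big")) % (1 << 256)
        count += 1
    return count, f"{combined:064x}"


# Hypothetical extracts: same rows in a different order, then one altered amount.
legacy = [(1, "A1", "10.00"), (2, "B2", "7.50")]
migrated = [(2, "B2", "7.50"), (1, "A1", "10.00")]
corrupted = [(1, "A1", "10.00"), (2, "B2", "7.05")]

matches = table_fingerprint(legacy) == table_fingerprint(migrated)
detects_change = table_fingerprint(legacy) != table_fingerprint(corrupted)
```

Row counts alone would pass the corrupted extract, since it still has two rows; the checksum is what catches the changed value.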

For orchestration patterns, see the Data Pipelines in Fabric guide; for transform examples, see the Transform Data Using Notebooks article.

FAQ: Frequently asked questions about Data Warehousing in Fabric

What role does Delta Lake play in Data Warehousing in Fabric?

Delta Lake provides ACID transactions, time-travel, and efficient merges; therefore, it is the recommended storage format to ensure reliability and reproducibility.

When should I use notebooks vs. Warehouse SQL for transformations?

Use notebooks for heavy, distributed transforms and feature engineering where Spark scale is needed. In contrast, use Warehouse SQL for low-latency serving and analytical queries; consequently, the two complement one another.

How can I make schema changes safely in a Data Warehousing in Fabric setup?

Adopt schema evolution best practices: test changes in staging, use feature-flagged releases, keep schema migrations in version control, and require reviews to prevent breaking downstream consumers.
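One automated check that fits into such a review is a comparison of the current and proposed schemas. The Python sketch below is a minimal version under stated assumptions: `breaking_changes` is a hypothetical helper, schemas are represented as simple column-to-type dictionaries, and added columns are treated as safe while removals and type changes are flagged.

```python
from typing import Dict, List


def breaking_changes(old: Dict[str, str], new: Dict[str, str]) -> List[str]:
    """List changes that could break downstream consumers.

    Added columns are treated as safe; removed columns and type changes are not.
    """
    problems: List[str] = []
    for column, old_type in old.items():
        if column not in new:
            problems.append(f"removed column: {column}")
        elif new[column] != old_type:
            problems.append(f"type change: {column} {old_type} -> {new[column]}")
    return problems


# Hypothetical schemas for the curated sales fact table.
current = {"order_id": "STRING", "amount": "DECIMAL(18,2)", "sale_date": "DATE"}
proposed = {"order_id": "STRING", "amount": "DOUBLE", "channel": "STRING"}
issues = breaking_changes(current, proposed)
```

A CI job could fail the pull request whenever the returned list is non-empty, leaving additive changes to pass without manual intervention.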

How do I control costs when running a Data Warehousing in Fabric deployment?

Control costs by optimizing file layout, scheduling compaction, choosing appropriate Warehouse sizes for concurrency, and using incremental transforms rather than full-table rewrites.

Where should I read next to implement Data Warehousing in Fabric?

Start with the Fabric Lakehouse Tutorial and the Data Pipelines in Fabric guide for step-by-step examples, then review the Transform Data Using Notebooks article for ELT patterns.