DP-600 Direct Lake Performance Optimization Deep Dive: OneLake vs SQL Endpoint Fallback

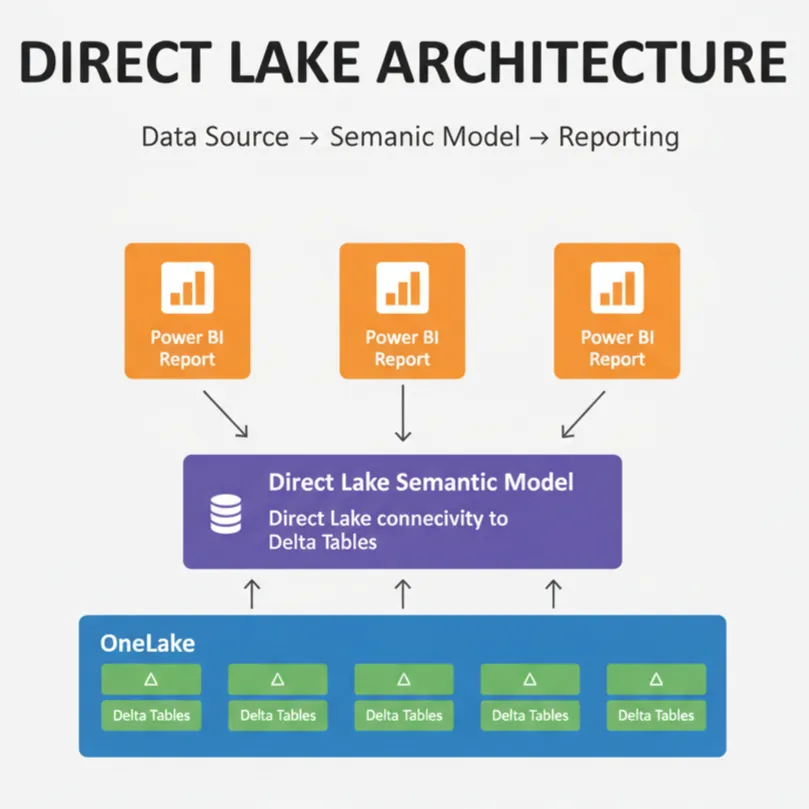

The DP-600 Direct Lake performance optimization topic starts with one critical architectural decision: do you connect your semantic model to Direct Lake on OneLake or to Direct Lake via a SQL endpoint? This choice determines whether your reports consistently run at Import-like speed or frequently fall back to slower DirectQuery behavior, which is why it appears often in exam scenarios. Getting this decision right is also the foundation for every other DP-600 Direct Lake performance optimization technique you apply later in the model.

When you use Direct Lake on OneLake, the semantic model reads Delta-Parquet data straight from OneLake storage through the VertiPaq engine. There is no SQL analytics endpoint in the path and, therefore, no possibility of SQL-based fallback. This path can give you predictable, Import-like query performance, even when fact tables grow into the billions of rows, as long as the underlying Delta tables are well optimized and the model stays within capacity guardrails.

However, when you configure Direct Lake against a lakehouse or warehouse SQL endpoint, the engine first asks the SQL endpoint for table metadata, security checks, and view definitions. In many real‑world DP‑600 scenarios, this extra layer introduces fallback triggers such as Row‑Level Security defined in SQL, non‑materialized views, or capacity guardrails being exceeded. Once a trigger fires, the query silently switches from VertiPaq to DirectQuery, and response times rise dramatically, which is exactly what you want to avoid in serious Direct Lake performance optimization work.

DP-600 Direct Lake Architecture Decision Table: OneLake vs SQL Endpoint

| Decision Criteria | Direct Lake on OneLake | Direct Lake via SQL Endpoint | DP-600 Optimization Recommendation |
|---|---|---|---|
| Performance Consistency | Zero SQL fallback risk, always VertiPaq | RLS and views may trigger DirectQuery | Choose OneLake for strict SLAs |
| Scalability | Supports 10B+ row fact tables | Best under 1.5B rows per table | Use OneLake for very large models |
| Security Model | Relies on OneLake item‑level security | Supports SQL RLS plus semantic RLS | Use SQL endpoint if SQL RLS is mandatory |
| View Usage | Materialized views recommended | Non‑materialized views allowed but risky | Prefer materialized views to avoid fallback |
| DP‑600 Focus Area | High‑scale OneLake architecture | Hybrid lakehouse/warehouse designs | Understand both for the exam |

From an optimization specialist’s point of view, Direct Lake on OneLake is ideal when you own the lakehouse design and can align security with OneLake permissions. In that case, you remove a whole class of fallback causes and keep the DP‑600 Direct Lake performance optimization story very clean. On the other hand, if you must respect existing warehouse security or complex SQL logic, connecting through a SQL endpoint may be unavoidable, and you then control risk by tightening your DirectLakeBehavior setting.

DP-600 Direct Lake Behavior Configuration for Fallback Control

In production environments that depend on stable response times, many teams choose DirectLakeBehavior = "DirectLakeOnly". This configuration forces issues to appear early during testing rather than hiding behind slow DirectQuery calls. It also aligns with DP‑600 expectations, where you are asked to design solutions that are transparent and predictable rather than silently degrading under load.
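
The effect of the setting can be sketched as a small Python model. This is an illustration, not Fabric API code: `resolve_storage_mode` and `FallbackError` are hypothetical names, and the three enum values are assumed to match the `DirectLakeBehavior` options exposed by the Tabular Object Model (Automatic, DirectLakeOnly, DirectQueryOnly).

```python
from enum import Enum


class DirectLakeBehavior(Enum):
    AUTOMATIC = "Automatic"                # default: fall back to DirectQuery when needed
    DIRECT_LAKE_ONLY = "DirectLakeOnly"    # never fall back; fail the query instead
    DIRECT_QUERY_ONLY = "DirectQueryOnly"  # always DirectQuery (useful for fallback testing)


class FallbackError(RuntimeError):
    """Hypothetical stand-in for the error a blocked fallback produces."""


def resolve_storage_mode(behavior: DirectLakeBehavior, needs_fallback: bool) -> str:
    """Return the engine path a query would take under the given behavior."""
    if behavior is DirectLakeBehavior.DIRECT_QUERY_ONLY:
        return "DirectQuery"
    if not needs_fallback:
        return "DirectLake"
    if behavior is DirectLakeBehavior.AUTOMATIC:
        return "DirectQuery"  # silent degradation
    raise FallbackError("Query requires fallback, but DirectLakeOnly forbids it")
```

Under this model, a fallback trigger surfaces as an error during testing with `DIRECT_LAKE_ONLY`, while `AUTOMATIC` quietly returns the slower path.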

DP-600 Direct Lake Performance Optimizations: Improve DAX Performance on Direct Lake Models

Once the correct architecture is in place, the next task in DP-600 Direct Lake performance optimization is to tune both the Delta tables and the DAX expressions. In practice, most slow models fail not because of capacity alone but because table layouts and measures do not work in harmony. By aligning storage patterns with query patterns, you can unlock the real potential of Direct Lake and deliver the responsiveness the DP‑600 exam expects you to design for.

First, focus on the physical structure of your Delta tables. For high‑value Fabric workloads, aim for row groups between one million and sixteen million rows, with an average close to four million. At the same time, keep the number of Parquet files per partition low, ideally under two hundred, so that VertiPaq touches fewer compressed segments for each query.
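
A quick way to apply these targets is to check collected table statistics against them. The sketch below assumes you have already gathered file counts and row group sizes per partition by some other means; `PartitionStats` and `layout_warnings` are hypothetical helpers, and the thresholds are simply the figures quoted above.

```python
from __future__ import annotations

from dataclasses import dataclass

MIN_ROWS_PER_GROUP = 1_000_000
MAX_ROWS_PER_GROUP = 16_000_000
MAX_FILES_PER_PARTITION = 200


@dataclass
class PartitionStats:
    name: str
    file_count: int
    row_group_sizes: list[int]  # number of rows in each row group


def layout_warnings(partition: PartitionStats) -> list[str]:
    """List the ways a partition misses the layout targets (empty list = healthy)."""
    warnings = []
    if partition.file_count >= MAX_FILES_PER_PARTITION:
        warnings.append(f"{partition.name}: {partition.file_count} files, target is under 200")
    too_small = sum(1 for rows in partition.row_group_sizes if rows < MIN_ROWS_PER_GROUP)
    too_large = sum(1 for rows in partition.row_group_sizes if rows > MAX_ROWS_PER_GROUP)
    if too_small:
        warnings.append(f"{partition.name}: {too_small} row group(s) below 1M rows")
    if too_large:
        warnings.append(f"{partition.name}: {too_large} row group(s) above 16M rows")
    return warnings
```

A partition that returns warnings here is a candidate for file compaction before you look at DAX tuning.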

Direct Lake Delta Optimization Script for DP-600 Practice
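
The original script is not reproduced here, so the following is a minimal sketch of what such a maintenance step might look like in a Fabric notebook. It assumes the Fabric Spark runtime accepts `OPTIMIZE ... ZORDER BY (...) VORDER` and `VACUUM ... RETAIN n HOURS`; the table name `gold.fact_sales`, the column `DateKey`, and both helper functions are hypothetical.

```python
def build_maintenance_sql(table, zorder_columns=(), retain_hours=168):
    """Build the Spark SQL statements for one maintenance pass over a Delta table."""
    optimize = f"OPTIMIZE {table}"
    if zorder_columns:
        # Cluster on columns that are frequently used in joins or filters.
        optimize += f" ZORDER BY ({', '.join(zorder_columns)})"
    optimize += " VORDER"  # rewrite compacted files with V-Order applied
    vacuum = f"VACUUM {table} RETAIN {retain_hours} HOURS"
    return [optimize, vacuum]


def run_maintenance(spark, table, zorder_columns=(), retain_hours=168):
    """Execute the statements with an existing SparkSession (for example, in a notebook)."""
    for statement in build_maintenance_sql(table, zorder_columns, retain_hours):
        spark.sql(statement)


if __name__ == "__main__":
    # Print the statements for review instead of running them.
    for statement in build_maintenance_sql("gold.fact_sales", ["DateKey"]):
        print(statement)
```

Keeping statement generation separate from execution lets you review the SQL before it touches a production table.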

Next, apply DAX patterns that let Direct Lake do efficient segment elimination. For example, replace text keys with integer surrogate keys, push filters into visual slicers instead of using heavy FILTER expressions, and start with simple aggregations like SUM before you introduce iterators. When combined with a well‑optimized table layout, these changes often cut query duration from several seconds down to a fraction of a second.

Improve DAX Performance with High‑Cardinality Reduction

Swap CustomerName and other long strings for integer IDs such as CustomerKey. This simple DP‑600 technique shrinks dictionary sizes, keeps more data hot in memory, and reduces the cost of joins across Direct Lake fact tables.
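
As a small illustration of the idea, the sketch below assigns integer surrogate keys to distinct customer names in plain Python. In a real pipeline this step would more likely run in Spark while building the Gold layer; `assign_surrogate_keys` and the sample names are made up for the example.

```python
def assign_surrogate_keys(names):
    """Map each distinct name to a compact integer key, in order of first appearance."""
    key_by_name = {}
    for name in names:
        if name not in key_by_name:
            key_by_name[name] = len(key_by_name) + 1
    return key_by_name


# Sample fact rows that still carry the long text value.
customer_names = ["Contoso Ltd", "Fabrikam Inc", "Contoso Ltd"]

customer_key = assign_surrogate_keys(customer_names)   # becomes the dimension table
fact_customer_keys = [customer_key[name] for name in customer_names]  # stored in the fact
```

The fact table then stores only the integer key, and the text value lives once in the dimension.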

Use Predicate Pushdown for Faster Direct Lake Queries

Whenever possible, move filters to the visual level so that Direct Lake can prune Parquet files early. Instead of wrapping a large table in FILTER inside a measure, let slicers or page filters narrow the data first, then let the measure aggregate the reduced set.

Prefer Simple Measures Before Iterators

Begin with measures like Total Sales = SUM(Sales[Amount]) and only add iterators when business logic truly requires them. This habit results in more efficient storage engine queries and supports the overall DP‑600 Direct Lake performance optimization strategy.

Adopt Hybrid Tables for RLS‑Heavy Dimensions

Keep massive fact tables in Direct Lake, yet load sensitive dimensions in Import mode. That way, you can define complex Row‑Level Security and calculated columns on smaller tables while preserving blazing‑fast Direct Lake scans over the main facts.

Monitoring Direct Lake Storage Residency with DMV

By monitoring this DMV, you can see which Direct Lake columns sit in Hot or Warm residency and which ones remain Cold. Ideally, most high‑traffic columns, such as foreign keys and key measures, should stay Hot or Warm. If too many important columns are Cold, you can adjust partitioning, change query patterns, or increase capacity so that Direct Lake can cache more segments in memory.
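
The DMV query itself is not reproduced here. As one possible approach, the sketch below assumes you have exported rows from a column segment DMV such as `DISCOVER_STORAGE_TABLE_COLUMN_SEGMENTS` and that each row carries `COLUMN_ID` and `ISRESIDENT` fields; treat those field names as assumptions to verify against your own output. The helper `non_resident_columns` and the sample rows are hypothetical, and no attempt is made to map values to the Hot, Warm, and Cold labels.

```python
def non_resident_columns(segment_rows, watch_list):
    """Return watched columns that have no segment currently resident in memory."""
    resident_by_column = {}
    for row in segment_rows:
        column = row["COLUMN_ID"]
        # A column counts as resident if any of its segments is resident.
        already = resident_by_column.get(column, False)
        resident_by_column[column] = already or bool(row["ISRESIDENT"])
    return sorted(c for c in watch_list if not resident_by_column.get(c, False))


# Made-up rows standing in for exported DMV output.
sample_rows = [
    {"COLUMN_ID": "CustomerKey", "ISRESIDENT": True},
    {"COLUMN_ID": "Amount", "ISRESIDENT": True},
    {"COLUMN_ID": "OrderComment", "ISRESIDENT": False},
]

high_traffic = ["CustomerKey", "Amount", "OrderComment", "DateKey"]
needs_attention = non_resident_columns(sample_rows, high_traffic)
```

Columns that appear in `needs_attention` after typical report usage are the ones to investigate first.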

DP-600 Direct Lake Capacity and Guardrail Strategy

Capacity planning is another major theme in DP‑600 questions about Direct Lake performance optimization. Even a perfectly designed model will struggle if capacity guardrails are exceeded. Therefore, you need to understand how rows, files, and memory limits interact for each Fabric F‑SKU.

| Fabric Capacity (F‑SKU) | Max Rows per Direct Lake Table | Max Parquet Files | Typical Memory | Recommended Direct Lake Usage |
|---|---|---|---|---|
| F64 | Up to 1.5 billion rows | Around 5,000 files | 25 GB | Departmental fact tables and pilot workloads |
| F512 | Up to 12 billion rows | Up to 10,000 files | 200 GB | Enterprise‑wide star schemas |
| F1024 | Up to 24 billion rows | Up to 10,000 files | 400 GB | Global, petabyte‑scale analytics models |

Whenever a Direct Lake table grows beyond these guardrails, Fabric falls back to DirectQuery under the default Automatic behavior. If the DirectLakeBehavior property is set to DirectLakeOnly, fallback is disabled and queries against that table fail with an error instead. Consequently, ongoing governance at DP‑600 level requires you to combine capacity monitoring with good housekeeping practices, such as regular OPTIMIZE operations and removal of orphaned partitions, to keep Direct Lake tables well under the limits.
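
To make the guardrail check concrete, the sketch below encodes the row and file limits from the table above and reports how much of each limit a table uses. The function names are hypothetical, and the limits should be re-checked against current Fabric documentation before you rely on them.

```python
# Row and file guardrails per F-SKU, taken from the table above.
GUARDRAILS = {
    "F64": {"max_rows": 1_500_000_000, "max_files": 5_000},
    "F512": {"max_rows": 12_000_000_000, "max_files": 10_000},
    "F1024": {"max_rows": 24_000_000_000, "max_files": 10_000},
}


def guardrail_usage(sku, row_count, file_count):
    """Return the fraction of each guardrail a table consumes (1.0 = at the limit)."""
    limits = GUARDRAILS[sku]
    return {
        "rows": row_count / limits["max_rows"],
        "files": file_count / limits["max_files"],
    }


def exceeds_guardrails(sku, row_count, file_count):
    """True when the table is over either the row or the file guardrail."""
    return any(value > 1.0 for value in guardrail_usage(sku, row_count, file_count).values())
```

For example, a 1.6 billion row table exceeds the F64 row guardrail but uses only a fraction of the F512 limit, which is the kind of comparison capacity questions tend to test.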

High-Level Strategy: Star Schema Design for DP-600 Direct Lake Performance

Beyond individual tables and measures, DP-600 Direct Lake performance optimization also requires a strong semantic modeling strategy. Technical choices only pay off when they fit into a bigger design, so you need to show how Direct Lake lives inside a clean star schema in Fabric. By doing that, you prove you understand not only how to make queries fast, but also how to keep models scalable and maintainable over time.

For most analytics engineers, the recommended pattern is straightforward. Place large transactional data in one or more Direct Lake fact tables; then, design conformed dimensions in Import mode. As a result, the model benefits from fast Direct Lake scans across very large datasets, while the Import dimensions support advanced Row‑Level Security, calculated columns, and user‑friendly hierarchies.

- Use a Bronze → Silver → Gold pipeline so that Direct Lake always reads from high‑quality, deduplicated Delta tables.

- Partition facts by low‑cardinality attributes such as Year‑Month to simplify incremental loads and archiving.

- Define business‑friendly dimensions around Customer, Product, and Date to keep report design intuitive.

- Consider cross‑lakehouse shortcuts when multiple domains share the same conforming dimensions.

- Align refresh schedules with business SLAs so that Direct Lake semantic models stay fresh without overloading capacity.

When you combine this star schema pattern with the DP‑600 Direct Lake performance optimization techniques described earlier, you end up with a solution that scales smoothly, respects governance, and remains debuggable. That, in turn, is exactly the kind of design exam questions expect you to recognize and recommend.

DP-600 Direct Lake Exam Practice: 18 Detailed Questions and Explanations

Q1. In which situations will Direct Lake connected via a SQL endpoint automatically fall back to DirectQuery?

Answer: Direct Lake via a SQL endpoint falls back whenever the SQL layer introduces features that VertiPaq cannot respect natively. Typical examples include Row‑Level Security defined in the SQL database, non‑materialized views that require query rewriting, and tables that exceed capacity guardrails for rows or files. Because OneLake connections bypass SQL, they do not suffer from these particular triggers.

Q2. How does DirectLakeBehavior help control Direct Lake performance in DP-600 scenarios?

Answer: The DirectLakeBehavior property tells the engine what to do when a query would normally fall back to DirectQuery. The Automatic setting allows the fallback to proceed silently, DirectLakeOnly stops the query with an error, and DirectQueryOnly sends every query through DirectQuery, which is mainly useful for testing fallback performance. In exam questions, DirectLakeOnly is often the right choice when predictable SLAs and debugging transparency are more important than always returning data.

Q3. Why is V-Order compression so important for Direct Lake performance optimization?

Answer: V‑Order compression rearranges data inside Parquet files to be more column‑store friendly. As a result, dictionary encoding becomes more efficient, segments compress better, and VertiPaq can scan the data faster. For DP‑600 scenarios that involve multi‑billion‑row facts, V‑Order effectively reduces the memory footprint and improves cache hit rates.

Q4. What row group size does Microsoft recommend for Direct Lake, and why?

Answer: Microsoft recommends row groups between one million and sixteen million rows, with about four million rows as a practical target. If segments are too small, metadata overhead increases; if they are too large, scans become heavy and harder to cache. The recommended range strikes the right balance between metadata size, compression, and segment elimination.

Q5. How can predicate pushdown improve DAX performance on Direct Lake models?

Answer: Predicate pushdown means that filters are applied as early as possible in the query pipeline. When you use slicers and page filters instead of wrapping entire tables in FILTER expressions, Direct Lake can skip reading whole Parquet files that do not match the filter conditions. Consequently, fewer segments are touched, and VertiPaq completes the query faster.

Q6. Why does replacing text columns with integer surrogate keys matter so much?

Answer: Text fields usually create very large dictionaries because each distinct value must be stored and tracked. Integer surrogate keys have much smaller dictionaries, so VertiPaq can cache more of them in memory. This change not only speeds up joins and group‑bys but also supports overall DP‑600 Direct Lake performance optimization goals by reducing memory pressure.

Q7. When is a hybrid table strategy the best choice in a DP-600 design question?

Answer: A hybrid strategy is best when the fact table is huge but dimensions are relatively small and security‑sensitive. In those cases, placing facts in Direct Lake and loading dimensions in Import mode gives you both fast scans and flexible Row‑Level Security. Exam questions often present this pattern when you must satisfy strict regulatory requirements without sacrificing responsiveness.

Q8. How should you respond if the DMV shows many important columns in Cold residency?

Answer: A high proportion of Cold columns indicates that segments are being repeatedly read from storage rather than reused from memory. To improve the situation, you can revise partitioning, reduce unnecessary columns, adjust report usage patterns, or scale up capacity. Any of these changes can help more frequently used segments move into Hot or Warm states.

Q9. What happens if a Direct Lake table exceeds the allowed number of Parquet files for its capacity?

Answer: When file counts exceed the guardrail for a given F‑SKU, Fabric no longer allows VertiPaq to read the table as Direct Lake. Under the default Automatic behavior, the engine falls back to DirectQuery; if DirectLakeBehavior is set to DirectLakeOnly, the query returns an error instead. In an exam answer, you would prevent this by periodically running OPTIMIZE and consolidating small files.

Q10. Why is OVERWRITE considered dangerous for Direct Lake performance?

Answer: OVERWRITE operations invalidate existing segment metadata and dictionaries. As a result, Direct Lake must rebuild those dictionaries the next time the table is framed, which increases CPU cost and may push columns into Cold residency temporarily. Incremental MERGE or APPEND patterns are therefore preferred for performance‑critical tables.

Q11. What is the typical use of Z-ordering in a DP-600 Direct Lake scenario?

Answer: Z‑ordering re‑clusters data on one or more high‑value columns, usually keys that appear in joins or filters. In the Direct Lake context, it increases the chance that segments containing similar key ranges end up together, making predicate pushdown more efficient. This technique pairs especially well with date or customer keys.

Q12. How does Automatic Updates affect Direct Lake refresh behavior?

Answer: Automatic Updates causes Direct Lake models to re‑frame themselves shortly after underlying Delta tables change. The refresh is usually fast, but during heavy ETL activity it can cause multiple framings in a short time. For that reason, architects sometimes disable Automatic Updates during long loads and trigger a manual refresh once the pipeline has finished.

Q13. In which case is DirectQueryOnly the most appropriate setting?

Answer: DirectQueryOnly forces every query through DirectQuery, so it is most useful as a diagnostic setting rather than a production default. For example, you can enable it in a test workspace to measure how slow reports become when fallback occurs, before deciding whether Automatic behavior is acceptable. In DP‑600, this option demonstrates that you can evaluate worst-case behavior deliberately instead of discovering it in production.

Q14. Why should you target fewer than 200 Parquet files per partition?

Answer: Too many small files increase metadata overhead and slow both the Spark engine and VertiPaq. By consolidating files into larger, well‑compressed chunks, you reduce the number of I/O operations and speed up segment scanning. This best practice appears often in performance‑oriented DP‑600 discussions.

Q15. What is the main security advantage of using OneLake instead of a SQL endpoint?

Answer: OneLake allows you to manage access through item‑level permissions at the storage layer. This keeps the security story simpler and eliminates many of the fallback triggers related to SQL‑based Row‑Level Security. If governance teams are comfortable with that model, it is usually the cleanest option for Direct Lake.

Q16. When would you deliberately choose Automatic behavior instead of DirectLakeOnly?

Answer: Automatic behavior is helpful when user experience must not be interrupted and occasional slower queries are acceptable. For instance, in exploratory analytics environments, analysts might prefer to receive a slower answer rather than an error. The DP‑600 exam expects you to recognize such trade‑offs and choose the option that matches business priorities.

Q17. How do hybrid tables support a smooth migration from Import models to Direct Lake?

Answer: Hybrid tables let you gradually move from traditional Import storage to Direct Lake while keeping some recent data cached in Import mode. This pattern provides a safety net during migration, because users continue to see familiar performance characteristics while you validate Direct Lake behavior on larger ranges of data.

Q18. Why is a clean star schema still essential, even with powerful Direct Lake capabilities?

Answer: Direct Lake improves how data is read, yet it does not change fundamental modeling principles. A well‑designed star schema minimizes complex joins, keeps relationships clear, and aligns with how users think about the business. Therefore, exam questions often reward candidates who combine Direct Lake technical tuning with classic dimensional modeling.
