Microsoft Fabric vs Databricks (2026): Architecture, Pricing, Performance & Verdict

Microsoft Fabric vs Databricks – SaaS vs PaaS • OneLake vs Unity Catalog • Direct Lake vs Photon • The Final Verdict

1. Quick Verdict (TL;DR)

The Microsoft Fabric vs Databricks debate is the defining enterprise data platform comparison of 2026. While both platforms have converged—Databricks adding SQL Warehouses to attack the BI market and Fabric adding Spark runtimes to attack the Data Engineering market—their core philosophies remain distinct. Consequently, choosing the right platform isn’t about feature checklists; it is about matching the tool to your team’s DNA.

| Feature | Microsoft Fabric | Databricks (Azure) |

|---|---|---|

| Delivery Model | SaaS: Unified, Zero-Config | PaaS: Modular, High Control |

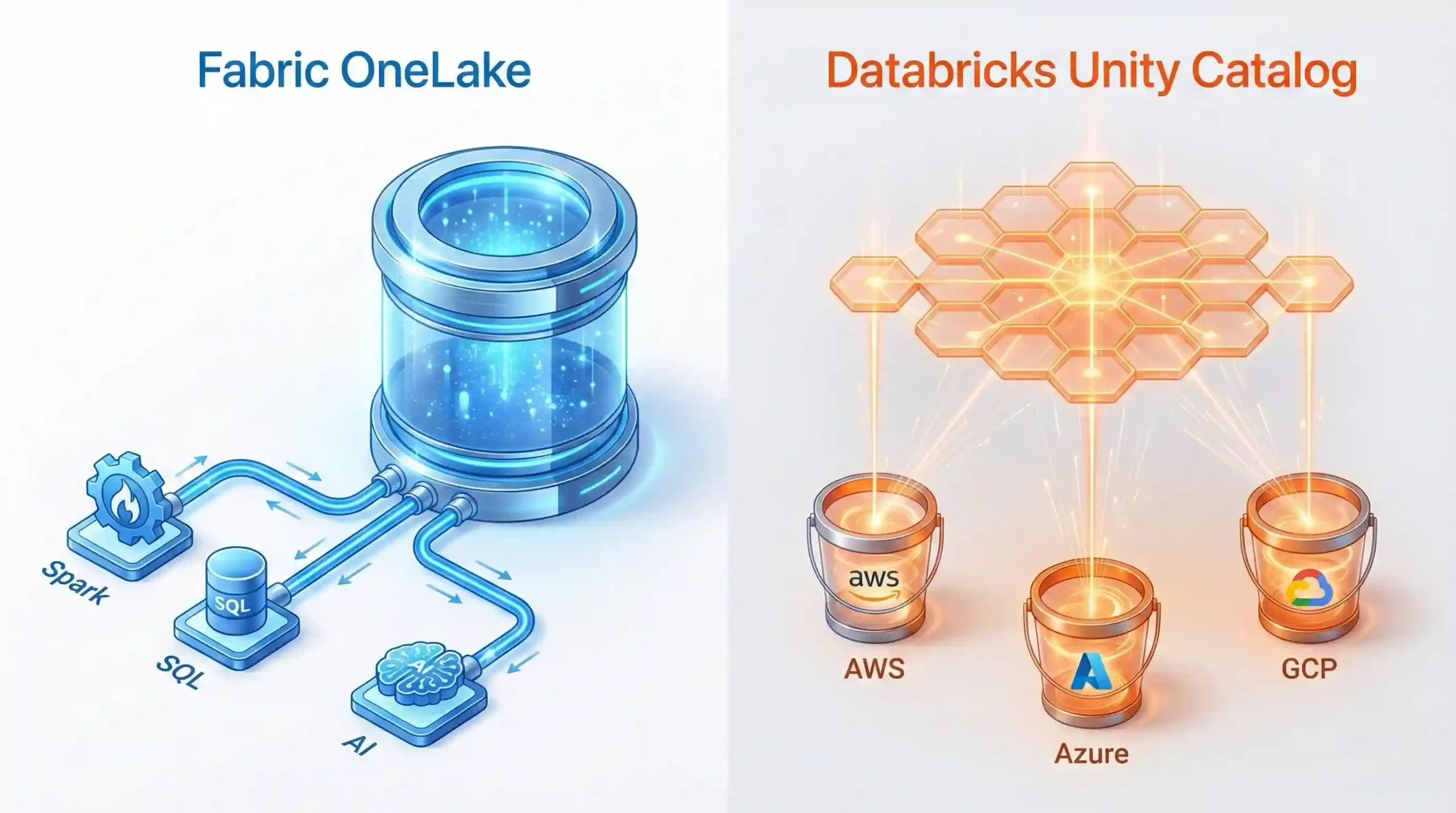

| Governance | OneLake: Physical Virtualization | Unity Catalog: Metadata Federation |

| Power BI | Direct Lake: No Import Refresh | DirectQuery: Compute Cost per Click |

| Multi-Cloud | Azure-Controlled: External data via Shortcuts | Cloud-Agnostic: Native AWS/GCP/Azure |

For a broader view of the ecosystem, you can also see how these platforms compare to other competitors in our Microsoft Fabric vs Snowflake Comparison and Fabric vs Synapse Guide.

2. Microsoft Fabric vs Databricks Architecture: SaaS vs. PaaS

When evaluating Microsoft Fabric vs Databricks, the most critical differentiator is the delivery model. Microsoft Fabric operates as a true SaaS (Software as a Service) platform, often referred to as the “Office for Data.” There are no clusters to provision, no VNets to configure manually, and upgrades happen automatically in the background. For teams new to Fabric (see our Fabric Overview tutorials), this simplicity is a major selling point.

In contrast, Databricks operates as a PaaS (Platform as a Service). This distinction fundamentally changes the engineer’s responsibility, as outlined in the official Databricks Architecture Documentation.

- Virtual Network Injection: Unlike Fabric’s managed endpoints, Databricks workspaces can be deployed directly into a customer-managed Azure Virtual Network. This architecture provides network security teams with granular control over Network Security Groups (NSGs) and firewall rules, a requirement for many banking and defense sectors.

- Runtime Versioning: Enterprise teams can pin production workloads to specific Long Term Support (LTS) runtimes, such as Databricks Runtime 13.3 LTS. This ensures that a background update from the vendor does not silently break critical pipelines, a control that SaaS platforms often sacrifice for convenience.

- Multi-Cloud Strategy: Databricks offers a consistent platform across Azure, AWS, and GCP. If your architecture demands true cloud agnosticism, Databricks lets you move compute workloads between clouds with relatively few changes. Fabric focuses heavily on the Azure ecosystem and connects to data in other clouds primarily via storage shortcuts.
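The sketch below makes runtime pinning concrete. It builds a cluster specification that uses the field names accepted by the Databricks Clusters API: `cluster_name`, `spark_version`, `node_type_id`, and `num_workers`. Three parts are illustrative assumptions: the cluster name, the Azure VM size, and the "approved LTS" allowlist. The version strings follow Databricks' `<major>.<minor>.x-scala<version>` naming, but you should confirm the exact keys for your workspace, for example with `databricks clusters spark-versions`.

```python
import json

# Hypothetical allowlist kept by a platform team: production jobs may only
# use runtimes on this list (assumption, not a Databricks feature).
APPROVED_LTS_RUNTIMES = {"13.3.x-scala2.12", "15.4.x-scala2.12"}


def build_cluster_spec(name: str, spark_version: str, workers: int = 2) -> dict:
    """Return a cluster spec dict pinned to an explicit runtime version."""
    if spark_version not in APPROVED_LTS_RUNTIMES:
        raise ValueError(f"{spark_version!r} is not an approved LTS runtime")
    return {
        "cluster_name": name,
        "spark_version": spark_version,     # pinned: upgrades require a code change
        "node_type_id": "Standard_DS3_v2",  # example Azure VM size (assumption)
        "num_workers": workers,
    }


spec = build_cluster_spec("nightly-etl", "13.3.x-scala2.12")
print(json.dumps(spec, indent=2))
```

The version is a literal string in source control. Upgrading the runtime therefore becomes an explicit, reviewable change rather than a background vendor update.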

3. Security & Governance: OneLake vs Unity Catalog

Source: Microsoft Learn – OneLake Architecture (Jan 2026)

Governance is where enterprise requirements become strict. Databricks Unity Catalog is a mature, federation-first governance layer. It manages permissions (ACLs), lineage, and discovery across all your workspaces and clouds from a single interface. It excels at fine-grained control for complex engineering teams, offering row-level and column-level security that persists regardless of which workspace is querying the data.
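The sketch below illustrates Unity Catalog's row- and column-level controls by rendering the SQL for one row filter and one column mask. It follows the pattern shown in the Databricks documentation: `CREATE FUNCTION ... RETURN`, then `ALTER TABLE ... SET ROW FILTER ... ON (...)` and `ALTER TABLE ... ALTER COLUMN ... SET MASK`. All table, column, function, and group names (`main.sales.orders`, `region`, `email`, `admins`) are hypothetical. In a Databricks notebook you would pass each statement to `spark.sql(...)`.

```python
def governance_statements(table: str, schema: str) -> list[str]:
    """Build Unity Catalog DDL for a row filter and a column mask.

    `schema` is the catalog.schema that holds the policy functions.
    All object and group names are hypothetical examples.
    """
    row_fn = f"{schema}.us_only_filter"
    mask_fn = f"{schema}.email_mask"
    return [
        # Members of 'admins' see every row; everyone else sees only US rows.
        f"CREATE OR REPLACE FUNCTION {row_fn}(region STRING) "
        "RETURN IF(is_account_group_member('admins'), true, region = 'US')",
        f"ALTER TABLE {table} SET ROW FILTER {row_fn} ON (region)",
        # Non-admins get a redacted value instead of the real email address.
        f"CREATE OR REPLACE FUNCTION {mask_fn}(email STRING) "
        "RETURN CASE WHEN is_account_group_member('admins') THEN email "
        "ELSE '***REDACTED***' END",
        f"ALTER TABLE {table} ALTER COLUMN email SET MASK {mask_fn}",
    ]


for stmt in governance_statements("main.sales.orders", "main.governance"):
    print(stmt + ";")
```

The policy functions are attached to the table itself, not to a workspace. That is why the filter and mask apply no matter which workspace runs the query.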

Microsoft Fabric takes a different approach with OneLake. It acts as a single, logical data lake for the entire tenant. Security is increasingly handled via integration with Microsoft Purview. For organizations already using Purview for Information Protection (sensitivity labels), Fabric offers seamless inheritance of those policies—something Databricks requires additional configuration to achieve.

4. Microsoft Fabric vs Databricks for Power BI

If Power BI is your primary visualization tool, Fabric has a distinct architectural advantage called Direct Lake. In this mode, the Power BI engine reads Delta tables (Parquet files) directly from OneLake and loads the columns a query needs into memory on demand, as detailed in the official Microsoft Direct Lake overview.

Specifically, this enables:

- Zero Data Movement: You do not need to copy data into a proprietary Power BI import format; the engine reads the Delta Parquet files in place.

- Near-Real-Time Updates: Reports can reflect new data shortly after ETL jobs commit, without a scheduled import refresh. The semantic model reframes to the latest version of the Delta tables automatically, unless you disable that setting.

- Shared Capacity Cost: Queries consume the same F-SKU capacity you use for data engineering rather than requiring separate premium capacity. Note that this is not “free,” but it consolidates billing.

On the other hand, Databricks connects to Power BI via DirectQuery or Import mode. With DirectQuery, each report interaction sends SQL to a Databricks SQL warehouse. This adds network latency, and the warehouse must be running (and consuming DBUs) to answer each query. Import mode offers fast queries, but it creates a separate copy of the data that is only as fresh as the last scheduled refresh.
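A toy model shows why report interactions translate into DBUs. The sketch below assumes a SQL warehouse that starts on the first query and auto-stops after a fixed idle window. Each interaction therefore keeps the warehouse billable until `auto_stop_min` minutes after the latest query. Several things are simplified away: query duration, cold-start time, and autoscaling. The `dbu_per_hour` value is a hypothetical input, not a published rate.

```python
def billable_minutes(query_times_min: list[float], auto_stop_min: float) -> float:
    """Total minutes the warehouse stays up, merging overlapping idle windows."""
    total = 0.0
    window_start = window_end = None
    for t in sorted(query_times_min):
        if window_end is not None and t <= window_end:
            # Query arrives before auto-stop: the current uptime is extended.
            window_end = t + auto_stop_min
        else:
            # Warehouse had stopped (or never started): close the old window.
            if window_end is not None:
                total += window_end - window_start
            window_start, window_end = t, t + auto_stop_min
    if window_end is not None:
        total += window_end - window_start
    return total


def estimated_dbus(query_times_min, auto_stop_min, dbu_per_hour):
    # dbu_per_hour depends on warehouse size; supply your own figure.
    return billable_minutes(query_times_min, auto_stop_min) / 60 * dbu_per_hour


# Three clicks at minutes 0, 5 and 60 with a 10-minute auto-stop window.
print(billable_minutes([0, 5, 60], auto_stop_min=10))  # 25.0
```

In this model, cost depends on how spread out the clicks are, not only on how many there are. This is why auto-stop settings matter for DirectQuery-heavy reports.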

5. Compute Engines: Polaris & Fabric Spark vs. Photon

For heavy data engineering and analytical workloads, the compute engine matters. Databricks uses the Photon engine, a C++ vectorized execution engine built from the ground up for speed. Photon excels at massive joins and aggregations, often outperforming standard Spark runtimes by significant margins on petabyte-scale data.

Microsoft Fabric splits this role across two engines. Its Data Warehouse and SQL analytics endpoints run on Polaris, a distributed SQL engine that separates compute from storage. Fabric Spark runs on the Fabric Runtime, which offers a Native Execution Engine, built on the open-source Velox and Apache Gluten projects, for vectorized execution. Spark “Starter Pools” drastically reduce session startup times (5-15 seconds), making Spark feel serverless. However, it is important to note that Fabric Spark does not currently match Photon's raw execution speed for large-scale analytical joins, especially highly vectorized, CPU-intensive workloads. For tips on tuning Fabric Spark, see our guide on Spark Shuffle Partition Optimization.
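On either platform, much of practical Spark tuning comes down to sizing shuffles. The sketch below applies a common rule of thumb, which is an assumption rather than an official formula from either vendor:

- Aim for roughly 128 MiB per shuffle partition.
- Round the partition count up to a multiple of the available cores, so that no task wave runs partly empty.

The function returns a config dict you could apply with `spark.conf.set`. `spark.sql.shuffle.partitions` and `spark.sql.adaptive.enabled` are standard Spark settings; with Adaptive Query Execution enabled, Spark may still coalesce partitions at runtime.

```python
import math

MiB = 1024 * 1024


def shuffle_partition_conf(shuffle_bytes: int, total_cores: int,
                           target_partition_bytes: int = 128 * MiB) -> dict:
    """Suggest spark.sql.shuffle.partitions for an expected shuffle size."""
    raw = max(1, math.ceil(shuffle_bytes / target_partition_bytes))
    # Round up to a whole number of "waves" across the cluster's cores.
    waves = math.ceil(raw / total_cores)
    return {
        "spark.sql.shuffle.partitions": str(waves * total_cores),
        "spark.sql.adaptive.enabled": "true",  # let AQE coalesce small partitions
    }


conf = shuffle_partition_conf(shuffle_bytes=10 * 1024 * MiB, total_cores=32)
print(conf["spark.sql.shuffle.partitions"])  # 96
# In a notebook: for key, value in conf.items(): spark.conf.set(key, value)
```

A 10 GiB shuffle at 128 MiB per partition needs 80 partitions. On 32 cores, that count rounds up to three full waves, which gives 96 partitions.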

6. Machine Learning & GenAI Capabilities

As AI becomes central to data strategies, the platform’s ability to support ML lifecycles is crucial.

Databricks: The AI Native

Databricks is the creator of MLflow, the industry standard for ML lifecycle management. With the acquisition of MosaicML (Mosaic AI), Databricks has positioned itself as the premier platform for training and fine-tuning Large Language Models (LLMs). It offers native vector databases, model serving endpoints, and deep integration with popular frameworks like Hugging Face.

Fabric: The Applied AI Challenger

Fabric integrates with Azure Machine Learning and Azure OpenAI Service through its Data Science workload (formerly branded Synapse Data Science), which uses MLflow for experiment and model tracking. You can train models using Spark, but Fabric focuses more on “Applied AI”: making it easy to consume pre-built AI models (like Azure OpenAI) within data pipelines. For teams building custom GenAI agents from scratch, Databricks currently offers a more robust tooling ecosystem.

7. Real-Time & Streaming Analytics

Modern lakehouse architecture comparisons often hinge on streaming capabilities. Databricks relies on Spark Structured Streaming, a robust and industry-standard framework for processing live data. It allows for complex stateful processing, watermarking, and exactly-once semantics. However, it requires deep Spark knowledge to tune and maintain effectively.
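To illustrate what watermarking means, here is a deliberately simplified, single-process simulation in plain Python; it is not Spark's API. It counts events in tumbling event-time windows. Like a Structured Streaming watermark, it tracks the latest event time seen minus an allowed delay, and it drops events that arrive older than that threshold. Real Spark differs in two ways this sketch omits:

- It advances the watermark per micro-batch rather than per event.
- It uses the watermark to evict finalized window state.

```python
from collections import Counter


def windowed_counts(events, window: int, delay: int):
    """Count events per tumbling event-time window, dropping data behind the watermark.

    `events` is a list of (event_time, key) pairs in arrival order.
    Returns (counts keyed by window start, list of dropped event times).
    """
    counts = Counter()
    dropped = []
    max_event_time = None
    for event_time, _key in events:
        # Watermark = latest event time seen so far minus the allowed delay.
        if max_event_time is not None and event_time < max_event_time - delay:
            dropped.append(event_time)  # too late: its window is considered closed
            continue
        if max_event_time is None or event_time > max_event_time:
            max_event_time = event_time
        counts[event_time // window * window] += 1  # tumbling window start
    return dict(counts), dropped


arrivals = [(10, "a"), (12, "b"), (30, "c"), (5, "d"), (25, "e")]
print(windowed_counts(arrivals, window=10, delay=10))
# ({10: 2, 30: 1, 20: 1}, [5])
```

The event at time 25 arrives out of order but within the 10-unit delay, so it is still counted. The event at time 5 falls behind the watermark (30 - 10 = 20), so it is dropped.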

Microsoft Fabric simplifies this with the Real-Time Intelligence workload (formerly Real-Time Analytics), which is built on the same Kusto engine as Azure Data Explorer. Using Eventstreams, users can ingest data from sources such as Azure IoT Hub, Kafka, or CDC feeds without writing code. For teams that need to build real-time dashboards without managing Spark clusters, Fabric offers a significantly lower barrier to entry.

8. CI/CD & DevOps: The Developer Experience

This is often the deciding factor for engineering leads. Databricks embraces a “Code-First” philosophy. With Databricks Asset Bundles (DABs), engineers can define jobs, pipelines, and related workspace resources as code and deploy them via standard CI/CD pipelines (GitHub Actions, Azure DevOps). The local development experience via the Databricks VS Code extension is mature and reliable.
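A bundle is driven by a `databricks.yml` file. To keep every example in this article in Python, the sketch below renders a minimal one as a string; in practice you would write the YAML by hand. The bundle name and workspace hosts are placeholders. Only the top-level `bundle` and `targets` keys are shown, with `development` and `production` modes; check the Databricks Asset Bundles configuration reference for the full schema.

```python
from textwrap import dedent


def render_bundle_yaml(name: str, dev_host: str, prod_host: str) -> str:
    """Render a minimal databricks.yml with dev and prod deployment targets."""
    return dedent(f"""\
        bundle:
          name: {name}

        targets:
          dev:
            mode: development
            default: true
            workspace:
              host: {dev_host}
          prod:
            mode: production
            workspace:
              host: {prod_host}
        """)


# Placeholder hosts; substitute your own workspace URLs.
print(render_bundle_yaml("sales_etl",
                         "https://adb-1111.11.azuredatabricks.net",
                         "https://adb-2222.22.azuredatabricks.net"))
```

With this file committed, a CI job could run `databricks bundle validate` and then `databricks bundle deploy -t prod` to promote the same definitions to production.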

Fabric takes a low-code-friendly approach. It offers Deployment Pipelines, a visual interface for promoting content from Dev to Test to Prod. Fabric now supports Git integration, but the experience is less granular than in Databricks. For example, resolving merge conflicts in Fabric semantic models or Dataflows can still be harder than in standard code files.

9. Microsoft Fabric vs Databricks Pricing Models

Comparing costs is notoriously difficult because the units differ. To help with this, we built a free Microsoft Fabric Pricing Calculator.

| Pricing Aspect | Microsoft Fabric (F-SKU) | Databricks (DBU) |
|---|---|---|
| Billing Model | Capacity Units (CU): A reserved pool of compute power. | Databricks Units (DBU): Consumption billed per second. |
| Hidden Cost | Paying for unused capacity during nights/weekends (unless you pause a pay-as-you-go capacity). | The “Double Bill” (classic compute): You pay DBUs plus the underlying cloud VM costs. |
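Because the units differ, a like-for-like comparison usually means converting both options to a monthly figure. The sketch below does that arithmetic in two ways:

- For Fabric, it compares an always-on capacity with one paused outside working hours.
- For Databricks classic compute, it adds the DBU charge and the separate cloud VM charge.

Every rate in the sketch is a hypothetical placeholder, not a published price. Substitute figures from the Azure pricing pages or from a pricing calculator.

```python
HOURS_PER_MONTH = 730  # billing convention commonly used for one month


def fabric_monthly(capacity_rate_per_hour: float,
                   hours_running: float = HOURS_PER_MONTH) -> float:
    """Capacity cost for the hours it runs; pausing lowers hours_running.

    Pausing only saves money on pay-as-you-go capacity, not reserved capacity.
    """
    return capacity_rate_per_hour * hours_running


def databricks_classic_monthly(dbu_per_hour: float, price_per_dbu: float,
                               vm_rate_per_hour: float,
                               hours_running: float) -> float:
    """Classic compute: DBU charge plus the separate cloud VM charge."""
    dbu_cost = dbu_per_hour * price_per_dbu * hours_running
    vm_cost = vm_rate_per_hour * hours_running
    return dbu_cost + vm_cost


# Hypothetical rates, chosen only to make the arithmetic easy to follow.
always_on = fabric_monthly(10.0)                # 730 hours
business_hours = fabric_monthly(10.0, 22 * 10)  # 22 days x 10 hours
etl_cluster = databricks_classic_monthly(8, 0.30, 3.0, 22 * 4)  # 22 days x 4 hours
print(f"Fabric always-on:        {always_on:.2f}")
print(f"Fabric paused off-hours: {business_hours:.2f}")
print(f"Databricks classic ETL:  {etl_cluster:.2f}")
```

Both hidden costs from the table show up directly. The always-on capacity bills for idle hours, while the Databricks figure has two terms that both scale with cluster runtime.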

10. Hybrid Reference Architecture (Better Together)

Many enterprise architects mistakenly believe they must choose one winner. In reality, the most robust enterprise architectures often use the “Better Together” pattern.

Common Hybrid Workflow:

- Ingest & Process (Databricks): First, use Databricks for complex ingestion, bronze-to-silver transformations, and heavy Python/ML workloads. This leverages the strength of DABs and the Photon engine. Write the final output to ADLS Gen2 as Delta tables.

- Virtualize (Fabric): Next, use Fabric Shortcuts to mount that ADLS Gen2 data into OneLake without moving it. This makes the data instantly available to the Fabric ecosystem.

- Serve & Analyze (Fabric): Finally, use Fabric Direct Lake to serve that data to Power BI users with near-zero latency and zero data movement.
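The hand-off between the two platforms is essentially a storage path plus a shortcut definition. The sketch below builds two things:

- The `abfss://` URI that a Databricks job could write the Delta table to.
- A request body for an ADLS Gen2 shortcut, in the shape used by the Fabric REST API's OneLake shortcuts endpoint (`path`, `name`, and `target.adlsGen2` with `location`, `subpath`, `connectionId`).

The storage account, container, table path, and connection ID are hypothetical. Verify the field names against the current API reference before use. No request is actually sent.

```python
import json


def delta_output_uri(account: str, container: str, table_dir: str) -> str:
    """abfss URI a Databricks job could write a Delta table to."""
    return f"abfss://{container}@{account}.dfs.core.windows.net/{table_dir}"


def adls_shortcut_body(account: str, container: str, table_dir: str,
                       shortcut_name: str, connection_id: str) -> dict:
    """Request body for an ADLS Gen2 shortcut under a lakehouse's Tables folder."""
    return {
        "path": "Tables",  # placed under Tables so the lakehouse can treat it as a table
        "name": shortcut_name,
        "target": {
            "adlsGen2": {
                "location": f"https://{account}.dfs.core.windows.net",
                "subpath": f"/{container}/{table_dir}",
                "connectionId": connection_id,  # an existing Fabric connection
            }
        },
    }


# Databricks side, in a notebook: df.write.format("delta").save(uri)
uri = delta_output_uri("contosolake", "silver", "sales/orders")
body = adls_shortcut_body("contosolake", "silver", "sales/orders",
                          "orders", "00000000-0000-0000-0000-000000000000")
print(uri)
print(json.dumps(body, indent=2))
```

According to the Fabric REST API reference, this body would be sent in a POST to `https://api.fabric.microsoft.com/v1/workspaces/{workspaceId}/items/{itemId}/shortcuts` for the target lakehouse. After that, the table can be used by Direct Lake semantic models like any other lakehouse table.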

11. Which Platform Fits Your Team? (Persona Guide)

For Data Engineers

If you live in VS Code, write complex Python/Scala transformations, and manage infrastructure as code, Databricks is your home. The control it offers is unmatched.

For Analytics Engineers

If your goal is to get data into Power BI as fast as possible using SQL or low-code Dataflows, Fabric is the winner. The integration reduces friction significantly.

When NOT to Use Microsoft Fabric

- Ultra-Low Latency ML Inference: If you need dedicated, millisecond-level model serving for a consumer app.

- Strict Multi-Cloud Isolation: If you need to run the exact same code on AWS and Azure without changes.

- Heavy GPU Training: For massive LLM pre-training, Databricks Mosaic AI is superior.

12. In-Depth FAQ: Microsoft Fabric vs Databricks

Can Microsoft Fabric replace Databricks?

For many organizations focusing on BI and standard data engineering, yes. If your primary output is Power BI and your transformations are SQL or standard PySpark, Fabric is sufficient. However, for specialized, high-scale engineering and complex ML workloads (like training LLMs), Databricks remains the superior tool.

Can Microsoft Fabric work with data governed by Databricks Unity Catalog?

Yes. As of 2026, Databricks exposes Unity Catalog metadata through open APIs, and Microsoft Fabric can integrate with Databricks data via Mirroring or direct Delta access through OneLake Shortcuts, without duplicating data.

Which platform is better for citizen developers?

Fabric is significantly better for citizen developers. Its integration with Dataflow Gen2 offers a Power Query experience that business users already know from Excel.

Is migrating existing Spark code to Fabric straightforward?

Fabric Spark is stable, but migrating legacy code can trigger errors. We have documented fixes for common issues like Py4JJavaError when saving Delta tables.

Does Fabric support Delta Live Tables (DLT)?

No. DLT is a proprietary Databricks feature. Fabric users typically replace DLT with Fabric Notebooks or Data Pipelines, but there is no direct “lift and shift” equivalent for the declarative DLT syntax.