Comprehensive overview 2025: platform components, integration patterns, operational guidance, and curated learning links to accelerate adoption of Microsoft Fabric.

Executive summary: Microsoft Fabric overview and what it delivers

Microsoft Fabric is a unified analytics platform that brings together Lakehouse storage (OneLake), Dataflow Gen2, notebooks, Eventstream, Data Mirroring, SQL Warehouse, Data Pipelines, and Power BI integrations. Consequently, teams can build end-to-end data products with reduced integration overhead, faster time-to-insight, and consistent governance. Fabric emphasizes an integrated developer and analyst experience, while still enabling advanced scenarios via Spark, Delta Lake, and external connectors.

This overview highlights practical patterns, operational guidance, and companion tutorials to help you adopt Fabric responsibly and efficiently.

Platform components explained

OneLake & Delta Lake

OneLake is Fabric’s unified storage layer. Tables in OneLake are stored in the Delta Lake format, which provides ACID transactions, time travel, and versioned tables, making OneLake the canonical data store for analytics and governance.

Notebooks

Notebooks provide Spark-powered, narrative-first development for large-scale transforms, machine learning feature engineering, and deterministic pipelines; see the Transform Data Using Notebooks guide for patterns.

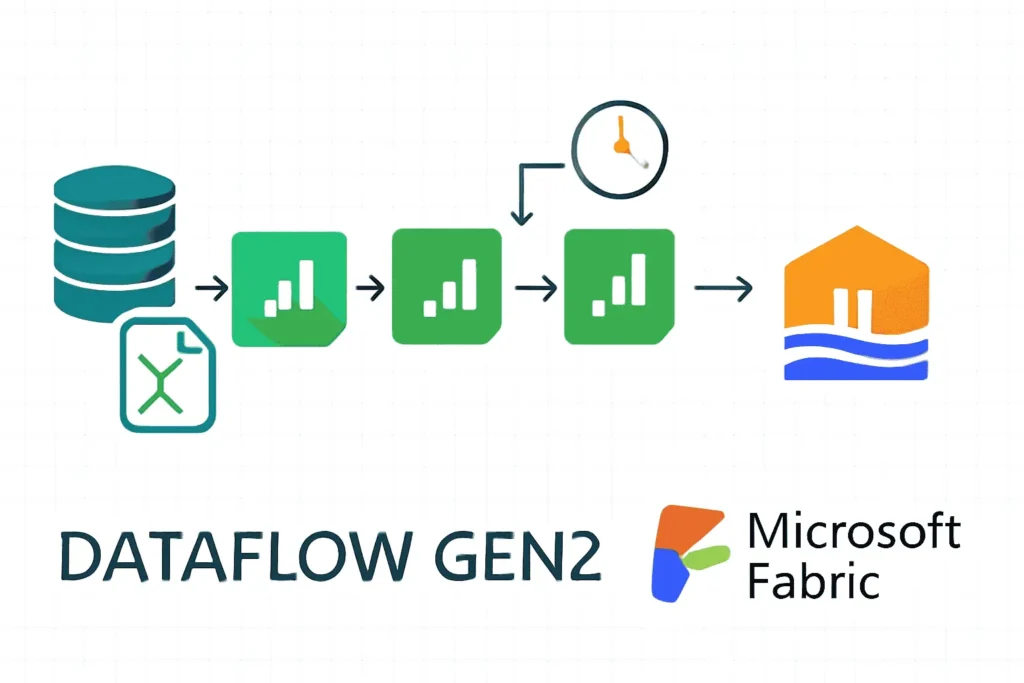

Dataflow Gen2

Dataflow Gen2 offers visual ETL/ELT and mapping; it’s ideal for repeatable cleanses and lightweight enrichment, with direct Delta outputs.

Data Pipelines

Data Pipelines orchestrate notebooks, dataflows, and activities with scheduling, retries, and parameterization for reliable production workflows.
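To illustrate what retries and parameterization mean for a single pipeline step, here is a minimal pure-Python sketch. It is not the Data Pipelines API: `run_with_retries`, its parameters, and the exponential backoff policy are assumptions chosen for illustration only.

```python
import time


def run_with_retries(step, params, max_attempts=3, base_delay_s=1.0, sleep=time.sleep):
    """Call step(**params), retrying on any exception with exponential backoff.

    Hypothetical helper: names and backoff policy are illustrative assumptions.
    The sleep function is injectable so the logic can be tested without waiting.
    """
    for attempt in range(1, max_attempts + 1):
        try:
            return step(**params)
        except Exception:
            if attempt == max_attempts:
                # Out of attempts: surface the last error to the caller.
                raise
            # Delays grow as base, 2*base, 4*base, ...
            sleep(base_delay_s * 2 ** (attempt - 1))


if __name__ == "__main__":
    calls = {"count": 0}

    def load_partition(run_date):
        # Fails twice, then succeeds, to exercise the retry path.
        calls["count"] += 1
        if calls["count"] < 3:
            raise RuntimeError("transient failure")
        return f"loaded {run_date}"

    print(run_with_retries(load_partition, {"run_date": "2025-01-01"}, sleep=lambda s: None))
```

Passing the run date as a parameter, rather than reading the clock inside the step, is what makes a failed run safe to repeat for the same date.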

Eventstream

Eventstream handles low-latency event ingestion, inline processing, and routing to OneLake, messaging, or webhooks for real-time analytics.

Data Mirroring

Data Mirroring supports near-real-time replication from databases into OneLake, enabling analytics on operational data with CDC semantics.
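To make "CDC semantics" concrete, the following pure-Python sketch folds an ordered list of change events into a table snapshot. The event shape (`op`, `key`, `row`) and the function name `apply_changes` are hypothetical; they do not describe the format Fabric uses internally.

```python
from typing import Any

# Hypothetical change-event shape, for illustration only:
#   {"op": "insert" | "update" | "delete", "key": <primary key>, "row": {...}}
ChangeEvent = dict[str, Any]


def apply_changes(snapshot: dict[Any, dict], events: list[ChangeEvent]) -> dict[Any, dict]:
    """Fold ordered change events into a copy of the snapshot, keyed by primary key."""
    result = {key: dict(row) for key, row in snapshot.items()}
    for event in events:
        op, key = event["op"], event["key"]
        if op in ("insert", "update"):
            # Upsert: the last write for a key wins.
            result[key] = dict(event["row"])
        elif op == "delete":
            # Deleting a missing key is a no-op.
            result.pop(key, None)
        else:
            raise ValueError(f"unknown op: {op!r}")
    return result


if __name__ == "__main__":
    base = {1: {"name": "Ada"}}
    changes = [
        {"op": "insert", "key": 2, "row": {"name": "Grace"}},
        {"op": "update", "key": 1, "row": {"name": "Ada L."}},
        {"op": "delete", "key": 2},
    ]
    print(apply_changes(base, changes))
```

Because inserts and updates are treated as upserts and deletes tolerate missing keys, replaying the same ordered batch a second time leaves the snapshot unchanged.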

Finally, Fabric integrates governance, cataloging, and security controls (Entra RBAC, masking, audit logs) to meet enterprise requirements.

How the Fabric pieces fit together in real projects

A typical Fabric pipeline combines multiple services: ingest events or CDC into OneLake (via Eventstream or Data Mirroring), land raw data, run deterministic transforms in Notebooks or Dataflow Gen2, persist curated Delta tables, and serve them via SQL Warehouse or Power BI semantic models. Keeping these stages separate gives a two-stage model (raw, then curated) that preserves auditability and simplifies debugging.

Example end-to-end flow

1. Real-time events → Eventstream → lakehouse.raw_events
2. Operational DB changes → Data Mirroring → mirrored_db.*
3. Daily transforms → Notebooks or Dataflow Gen2 → curated Delta tables
4. Orchestration → Data Pipelines schedule and monitor steps
5. Consumption → SQL Warehouse / Power BI datasets / ML feature tables
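The flow above can be read as a dependency graph, which is the shape an orchestrator has to respect. The sketch below uses Python's standard `graphlib` to derive a valid run order; the step names are illustrative labels, not Fabric identifiers.

```python
from graphlib import TopologicalSorter

# Each step maps to the set of steps it depends on (names are illustrative).
FLOW = {
    "ingest_events": set(),
    "mirror_operational_db": set(),
    "daily_transforms": {"ingest_events", "mirror_operational_db"},
    "serve_consumers": {"daily_transforms"},
}


def execution_order(flow):
    """Return one valid order in which every step runs after its dependencies."""
    return list(TopologicalSorter(flow).static_order())


if __name__ == "__main__":
    print(execution_order(FLOW))
```

The two ingestion steps have no dependency on each other, so an orchestrator is free to run them in parallel before the transforms start.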

In short, this modular approach lets teams choose the right tool for each job while keeping a single, governed data plane.

Common patterns and recommended combinations

Use these proven patterns to accelerate delivery and to reduce risk.

Two-stage ingestion and transform

First, land raw events or mirrored changes into a raw zone; next, apply deterministic transforms in notebooks or Dataflow Gen2 to produce curated Delta tables. Because the raw zone is kept unchanged, curated tables can be rebuilt from it at any time, which improves reproducibility and allows safe backfills.
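As a rough illustration of what "deterministic" means here, the sketch below derives a curated result from raw rows using plain Python lists in place of Delta tables. The column names (`event_id`, `ts`, `amount`) and the cleaning rules are assumptions made up for the example.

```python
def to_curated(raw_rows):
    """Pure function from raw rows to curated rows: no clock, no randomness, no I/O.

    Assumed rules (illustrative): drop rows missing event_id or ts, cast amount
    to a rounded float, and keep only the latest row per event_id. Timestamps
    are ISO-8601 strings in one format, so string comparison orders them.
    """
    latest = {}
    for row in raw_rows:
        if "event_id" not in row or "ts" not in row:
            continue  # malformed rows stay in the raw zone but are not curated
        cleaned = {
            "event_id": row["event_id"],
            "ts": row["ts"],
            "amount": round(float(row.get("amount", 0)), 2),
        }
        current = latest.get(cleaned["event_id"])
        if current is None or cleaned["ts"] > current["ts"]:
            latest[cleaned["event_id"]] = cleaned
    # Sort the output so the result does not depend on arrival order.
    return sorted(latest.values(), key=lambda r: r["event_id"])


if __name__ == "__main__":
    raw = [
        {"event_id": "a", "ts": "2025-01-01T00:00:00", "amount": "10.0"},
        {"event_id": "a", "ts": "2025-01-01T00:05:00", "amount": "12.5"},
        {"event_id": "b", "ts": "2025-01-01T00:01:00"},
    ]
    print(to_curated(raw))
```

A backfill is then just a rerun of the same function over the retained raw rows, and the output should match the original run exactly.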

Streaming + micro-batch hybrid

For low-latency use cases, route events via Eventstream to a near-real-time sink, and then run micro-batch notebooks to compute aggregates and features. This pattern balances latency with cost and operational complexity.
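The micro-batch half of this pattern can be sketched as a tumbling-window aggregation. This is a plain-Python stand-in for what a notebook job might compute; the event fields (`epoch_s`, `key`, `value`) and the 60-second window are assumptions for illustration.

```python
from collections import defaultdict


def aggregate_micro_batch(events, window_seconds=60):
    """Group events into tumbling windows and compute count and sum per (window, key)."""
    totals = defaultdict(lambda: {"count": 0, "sum": 0.0})
    for event in events:
        # Floor the timestamp to the start of its window.
        window_start = (event["epoch_s"] // window_seconds) * window_seconds
        bucket = totals[(window_start, event["key"])]
        bucket["count"] += 1
        bucket["sum"] += event["value"]
    return dict(totals)


if __name__ == "__main__":
    batch = [
        {"epoch_s": 0, "key": "sensor-1", "value": 1.0},
        {"epoch_s": 59, "key": "sensor-1", "value": 2.0},
        {"epoch_s": 60, "key": "sensor-1", "value": 5.0},
    ]
    for window_key, stats in sorted(aggregate_micro_batch(batch).items()):
        print(window_key, stats)
```

Widening the window or running the batch less often trades freshness for fewer, larger jobs, which is the latency-versus-cost balance described above.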

Feature tables and ML readiness

Persist reproducible, versioned feature tables in Delta with clear contracts; then use notebooks and pipelines to refresh features and to trigger model scoring. See the RAG and ML guides for trustworthy AI patterns in Fabric.
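One way to read "clear contracts" is as a schema check that runs before a feature table is refreshed. The sketch below is a minimal version of that idea in plain Python; the contract contents and the function name `contract_violations` are hypothetical.

```python
# Hypothetical feature contract: column name -> expected Python type.
FEATURE_CONTRACT = {"customer_id": str, "orders_30d": int, "avg_basket": float}


def contract_violations(rows, contract):
    """Return human-readable problems; an empty list means the rows honor the contract."""
    problems = []
    for index, row in enumerate(rows):
        missing = contract.keys() - row.keys()
        extra = row.keys() - contract.keys()
        if missing:
            problems.append(f"row {index}: missing {sorted(missing)}")
        if extra:
            problems.append(f"row {index}: unexpected {sorted(extra)}")
        for name, expected in contract.items():
            if name in row and not isinstance(row[name], expected):
                actual = type(row[name]).__name__
                problems.append(f"row {index}: {name} is {actual}, expected {expected.__name__}")
    return problems


if __name__ == "__main__":
    rows = [
        {"customer_id": "c1", "orders_30d": 3, "avg_basket": 41.5},
        {"customer_id": "c2", "orders_30d": "3"},
    ]
    for problem in contract_violations(rows, FEATURE_CONTRACT):
        print(problem)
```

A refresh pipeline could refuse to publish a new feature version whenever this list is non-empty, so downstream scoring never sees a silently changed schema.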

Migration, coexistence, and hybrid strategies

When moving from legacy warehouses or Synapse, run a phased migration: inventory schemas and pipelines, ingest historical data into OneLake as Parquet/Delta, parallel-run queries and reports for validation, and then cut over models and BI datasets. Alternatively, keep Synapse for highly tuned legacy warehouses while adopting Fabric for new analytics and event-driven workloads.

Coexistence tips

- Share curated Delta outputs via ADLS/OneLake for cross-platform consumption

- Use Data Pipelines and connectors to integrate Synapse and Fabric jobs

- Validate SLAs and consistency with reconciliation jobs before cutover
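A reconciliation job of the kind mentioned in the last tip can be as simple as comparing row counts and an order-independent content hash between the legacy and the new table. The sketch below works on in-memory rows and is an assumption about how such a check might look, not a Fabric or Synapse feature.

```python
import hashlib


def table_fingerprint(rows):
    """Return (row_count, digest); the digest ignores row order and column order."""
    row_digests = []
    for row in rows:
        # Canonicalize each row by sorting its columns.
        canonical = "|".join(f"{column}={row[column]!r}" for column in sorted(row))
        row_digests.append(hashlib.sha256(canonical.encode("utf-8")).hexdigest())
    # Sorting the per-row digests makes the combined digest order-independent.
    combined = hashlib.sha256("".join(sorted(row_digests)).encode("utf-8")).hexdigest()
    return len(rows), combined


def reconcile(source_rows, target_rows):
    """Compare two row sets and report whether counts and contents agree."""
    source_count, source_digest = table_fingerprint(source_rows)
    target_count, target_digest = table_fingerprint(target_rows)
    return {
        "row_count_match": source_count == target_count,
        "content_match": source_digest == target_digest,
    }


if __name__ == "__main__":
    legacy = [{"id": 1, "total": 10}, {"id": 2, "total": 20}]
    migrated = [{"total": 20, "id": 2}, {"id": 1, "total": 10}]
    print(reconcile(legacy, migrated))
```

The hash is built from Python `repr` values, so `1` and `1.0` count as different; a real job would normalize types and precision on both sides before comparing.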

Cost, capacity, and optimization guidance

Fabric pricing revolves around capacity units and managed endpoints; therefore, control costs by right-sizing compute, scheduling heavy maintenance off-peak, and using incremental transforms to avoid full-table rewrites. Additionally, monitor usage with capacity optimization practices to reclaim idle resources and to tune concurrency.
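Incremental transforms usually rest on a watermark: each run processes only rows changed since the previous run. The sketch below shows the idea on in-memory rows; the `updated_at` column and the function name are assumptions for illustration.

```python
def incremental_batch(rows, last_watermark):
    """Return (rows newer than the watermark, new watermark).

    Assumes every row carries a comparable `updated_at` value (hypothetical column).
    If nothing is new, the watermark is returned unchanged.
    """
    new_rows = [row for row in rows if row["updated_at"] > last_watermark]
    new_watermark = max((row["updated_at"] for row in new_rows), default=last_watermark)
    return new_rows, new_watermark


if __name__ == "__main__":
    table = [
        {"id": 1, "updated_at": "2025-01-01"},
        {"id": 2, "updated_at": "2025-01-02"},
        {"id": 3, "updated_at": "2025-01-03"},
    ]
    changed, watermark = incremental_batch(table, "2025-01-01")
    print(changed, watermark)
```

Only the changed rows are then merged into the curated table, which avoids rewriting the rows that did not move.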

Practical tips

- Use representative POCs to model costs for ETL, queries, and concurrency

- Schedule OPTIMIZE and compaction during low-traffic windows

- Use result caching and semantic layers to reduce repeated scans

- Review Microsoft Fabric Capacity Optimization guidance for reclaiming unused capacity

Operational best practices and production stability

Operational maturity includes CI/CD for notebooks and dataflows, monitoring run metrics, and a clear incident response runbook. For stability, parameterize runs, keep deterministic pipelines separate from exploratory work, and require PR reviews for production changes.

Stability checklist

- Store notebooks and SQL scripts in Git and validate with lightweight integration tests

- Capture lineage, run metadata, and table versions for audit and rollback

- Implement alerting for failed pipelines, replication lag, and unusual volume changes

- Adopt capacity optimization and profiling to avoid noisy neighbor issues
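For the alerting item, "unusual volume changes" can be approximated with a simple deviation check against recent history. The sketch below is one possible heuristic, not a Fabric monitoring feature; the three-standard-deviation threshold is an arbitrary assumption to tune per table.

```python
from statistics import mean, pstdev


def volume_alert(history, today, threshold=3.0):
    """Flag today's row count when it sits more than `threshold` standard
    deviations from the mean of recent daily counts."""
    if len(history) < 2:
        return False  # not enough history to judge
    mu, sigma = mean(history), pstdev(history)
    if sigma == 0:
        # Perfectly flat history: any change at all is unusual.
        return today != mu
    return abs(today - mu) / sigma > threshold


if __name__ == "__main__":
    recent_counts = [100, 102, 98, 101, 99]
    print(volume_alert(recent_counts, 100))
    print(volume_alert(recent_counts, 150))
```

The same shape of check works for replication lag: compare the current lag against its recent distribution instead of against a fixed number.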

For deep operational guidance, see our production stability review and optimization articles linked below.

Curated learning path and companion articles

Follow this curated path to gain practical, end-to-end expertise in Fabric.

- Fabric Lakehouse Tutorial — fundamentals of OneLake and Delta

- Transform Data Using Notebooks — ETL/ELT and reproducible pipelines

- Data Pipelines in Fabric — orchestration and CI

- Dataflow Gen2 — low-code mapping

- Data Warehousing in Fabric — modeling and serving

- Data Mirroring in Fabric — CDC and replication

- Eventstream in Fabric — streaming and routing

- Fabric vs Synapse comparison — platform decision guidance

- RAG & trustworthy AI, and Power BI AI guides for analytics and AI-driven reporting

- Production stability review — deep ops and reliability patterns

Frequently asked questions

Is Microsoft Fabric suitable for enterprise analytics workloads?

Yes. Fabric is designed for enterprise analytics with integrated governance, capacity management, and scalable compute; however, validate SLAs and feature completeness for critical workloads and consider hybrid patterns for legacy, highly tuned warehouses.

How do I get started quickly with Fabric?

Start with the Fabric Lakehouse tutorial to set up OneLake, then build a simple ETL with Dataflow Gen2 or a notebook, orchestrate with Data Pipelines, and publish a Power BI dataset for consumption.

How do I keep costs under control?

Right-size capacity, schedule heavy maintenance off-peak, use incremental processing, and apply capacity optimization practices. Run a short POC with representative workloads to model cost realistically.

Where can I find production-ready patterns and examples?

Use the companion articles listed above for focused patterns: ETL with notebooks, dataflows, mirroring, event streaming, warehousing, and production stability guidance.

Companion tutorials and resources

- Eventstream in Fabric

- Data Mirroring in Fabric

- Dataflow Gen2 in Fabric

- Data Warehousing in Fabric

- Transform Data Using Notebooks

- Microsoft Fabric Overview (canonical)

- RAG & Trustworthy AI in Fabric

- Power BI AI Ready

- Power BI DAX with AI

- AI in Power BI

- Production Stability for Fabric

- Fabric vs Azure Synapse

- Microsoft Fabric Data Agent

- Fabric Capacity Optimization

- Power BI Premium to Fabric Migration

- Fabric Data Warehouse Optimization

- Fabric Lakehouse Tutorial

- Data Pipelines in Fabric