Data Pipelines in Fabric Tutorial – Complete 2025 Guide to Pipeline Activities and Triggers

Welcome to this detailed Data Pipelines in Fabric tutorial. It walks through the pipeline activities available in Microsoft Fabric Data Factory, including the 2025 updates: data movement and transformation, control flow, triggers, and database routines such as stored procedures. The aim is to explain both the “how” and the “why” behind each pipeline component so you can orchestrate data engineering workloads with confidence.

Table of Contents

- Introduction to Microsoft Fabric Data Pipelines

- Trigger Types: Manual, Scheduled, and Event-Based

- Copy Activity: Core Data Movement

- Dataflow Activity: Visual Data Transformations

- Execute Pipeline: Modular and Nested Workflows

- Lookup Activity: Data-driven Conditional Logic

- ForEach Activity: Looping Over Collections

- Wait Activity: Control Delays and Pacing

- Web Activity: REST API Integrations

- Spark Job Activity: Running Custom Spark Jobs

- Stored Procedure Activity: Calling Database Procedures

- Until Activity: Conditional Looping

- Delete Activity: Cleanup Data and Files

- Append Variable Activity: Dynamic Arrays

- Set Variable Activity: State Management

- If Condition Activity: Branching Logic

- Switch Activity: Multi-way Branching

- Security and Monitoring

- Best Practices for Pipeline Development

- Further Learning and Official Docs

Introduction to Microsoft Fabric Data Pipelines

Microsoft Fabric Data Pipelines automate and orchestrate data workflows for ingestion, transformation, and movement across Microsoft Fabric services. Pipelines consist of configured activities executed in sequence or parallel, triggered manually, on schedules, or by events. This tutorial covers these facets in detail, making it your go-to guide for mastering Fabric Pipelines in 2025.

Trigger Types: Manual, Scheduled, and Event-Based

Pipelines in Microsoft Fabric can be triggered in various ways:

- Manual Triggers: Run a pipeline on demand using the UI or REST API requests.

- Schedule Triggers: Automatically run at defined intervals (e.g., hourly, daily).

- Event-Based Triggers: Trigger pipelines based on events like new files appearing in OneLake or job completion.

These options provide extensive automation flexibility for your workflows.
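As a rough illustration of the on-demand option, the sketch below builds (but does not send) a REST request that starts a pipeline run. The endpoint path, the `jobType=Pipeline` query parameter, and the `executionData.parameters` body shape reflect my understanding of the Fabric job scheduler API and should be checked against the official reference. The IDs, token, and `run_date` parameter are hypothetical placeholders.

```python
import json
import urllib.request

# Assumed base URL of the Fabric REST API; verify against the official docs.
FABRIC_API = "https://api.fabric.microsoft.com/v1"


def build_run_request(workspace_id, pipeline_id, token, parameters=None):
    """Build (but do not send) an on-demand pipeline run request."""
    url = (
        f"{FABRIC_API}/workspaces/{workspace_id}"
        f"/items/{pipeline_id}/jobs/instances?jobType=Pipeline"
    )
    # Assumed body shape: pipeline parameters nested under executionData.
    body = {"executionData": {"parameters": parameters or {}}}
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


# Hypothetical identifiers and parameter, for illustration only.
req = build_run_request("ws-123", "pl-456", "example-token", {"run_date": "2025-01-01"})
print(req.get_method(), req.full_url)
```

Passing the request to `urllib.request.urlopen(req)` would actually submit it. That requires a valid Microsoft Entra ID access token and real workspace and pipeline IDs.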

Copy Activity: Core Data Movement

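Moves data between supported sources and sinks through more than 90 connectors. It supports full batch loads as well as incremental loads, where a watermark records the last value copied so that each run moves only new or changed rows.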
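The watermark idea can be sketched in plain Python, independent of any Fabric API. The `modified` column and the in-memory `source` list are assumptions made for illustration. In a real pipeline the watermark would usually be stored in a control table and the filter pushed into the source query.

```python
from datetime import datetime


def incremental_batch(rows, last_watermark):
    """Return rows newer than the watermark, plus the new watermark value."""
    new_rows = [r for r in rows if r["modified"] > last_watermark]
    # Advance the watermark only if something was actually copied.
    new_watermark = max((r["modified"] for r in new_rows), default=last_watermark)
    return new_rows, new_watermark


# Hypothetical source rows with a last-modified timestamp.
source = [
    {"id": 1, "modified": datetime(2025, 1, 1)},
    {"id": 2, "modified": datetime(2025, 1, 5)},
    {"id": 3, "modified": datetime(2025, 1, 9)},
]

batch, watermark = incremental_batch(source, datetime(2025, 1, 3))
print([r["id"] for r in batch], watermark.date())  # [2, 3] 2025-01-09
```

Running the function again with the returned watermark yields an empty batch, which is what keeps repeated runs cheap.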

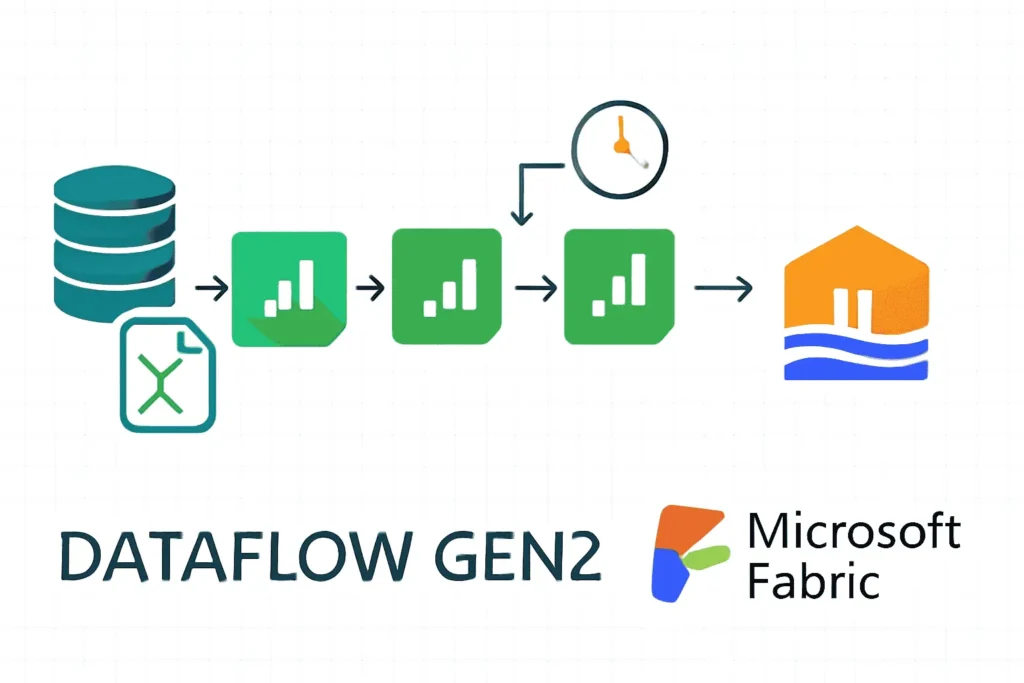

Example: Efficiently copy CSV files from Azure Blob Storage into OneLake as Parquet with retry and error handling configuration.

Dataflow Activity: Visual Data Transformations

Low-code design of complex transformation logic with Dataflow Gen2, which is built on Power Query rather than on the Spark-based mapping data flows of Azure Data Factory. Capabilities include joins, filtering, aggregation, and handling of changing source schemas.

Execute Pipeline: Modular and Nested Workflows

Invoke other pipelines within a pipeline to enable modular, reusable workflow components with parameter passing and wait-for-completion control.

Lookup Activity: Data-driven Conditional Logic

Retrieve a row or a small result set from a supported data source and use it to drive pipeline flow or set variables dynamically.

ForEach Activity: Looping Over Collections

Run a set of activities once per item in an array, either sequentially or in parallel. This is crucial for processing batches of files or records.
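To make the sequential versus parallel choice concrete, here is a small sketch that builds a ForEach definition as a Python dict. The property names (`items`, `isSequential`, `batchCount`, `activities`) follow the JSON shape used by Azure Data Factory pipelines, which Fabric pipelines appear to share. Treat them as an assumption and compare with the JSON view of your own pipeline. The `ListFiles` activity name is hypothetical.

```python
import json


def for_each(name, items_expression, inner_activities, sequential=False, batch_count=4):
    """Return a ForEach activity definition as a plain dict."""
    type_properties = {
        "items": {"value": items_expression, "type": "Expression"},
        "isSequential": sequential,
        "activities": inner_activities,
    }
    if not sequential:
        # batchCount caps how many iterations run at once in parallel mode.
        type_properties["batchCount"] = batch_count
    return {"name": name, "type": "ForEach", "typeProperties": type_properties}


# A trivial inner activity; real pipelines would typically copy or transform here.
wait_step = {"name": "Pause", "type": "Wait", "typeProperties": {"waitTimeInSeconds": 5}}

# 'ListFiles' is a hypothetical upstream activity that returns an array.
activity = for_each("ForEachFile", "@activity('ListFiles').output.value", [wait_step])
print(json.dumps(activity, indent=2))
```

Sequential mode is the safer default when iterations share state such as variables. Parallel mode is faster when each item is independent.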

Wait Activity: Control Delays and Pacing

Pause for a specified duration to coordinate pipeline timing with external system readiness or prevent throttling.

Web Activity: REST API Integrations

Send HTTP requests as part of pipelines to integrate external applications, trigger workflows, or call microservices.

Spark Job Activity: Running Custom Spark Jobs

Submit custom Spark batch jobs (PySpark, Scala, R) within pipeline orchestration for advanced transformations or ML workloads.

Stored Procedure Activity: Calling Database Procedures

Execute SQL stored procedures on supported SQL platforms such as Fabric SQL DB, Azure SQL, Synapse, and others. Stored procedures are a good place to encapsulate heavy database logic or validations, and the activity can pass them input parameters built dynamically from pipeline expressions.

Uses include: Incremental ETL logic, data validation, audit logging performed close to the data source for higher efficiency.

Until Activity: Conditional Looping

Keep executing inner activities until a specified condition is met, useful for polling or awaiting external state changes.
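The polling pattern behind Until can be modeled in a few lines of Python. This is only a simulation of the control flow, assuming do-until semantics (the inner activities run first, then the condition is checked). The iteration cap stands in for the activity's timeout, and the status values are hypothetical.

```python
def run_until(body, condition, max_iterations=10):
    """Run body repeatedly until condition(result) is true or the cap is hit."""
    for attempt in range(1, max_iterations + 1):
        result = body()          # inner activities run first...
        if condition(result):    # ...then the exit condition is checked
            return attempt, result
    raise TimeoutError(f"condition not met after {max_iterations} iterations")


# Hypothetical external job that reports "Succeeded" on the third poll.
statuses = iter(["Running", "Running", "Succeeded"])
attempts, final = run_until(lambda: next(statuses), lambda s: s == "Succeeded")
print(attempts, final)  # 3 Succeeded
```

In a real pipeline the body would typically combine a Web or Lookup activity with a Wait activity, so the external system is not polled in a tight loop.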

Delete Activity: Cleanup Data and Files

Remove files or folders in Lakehouse storage or other supported data stores, used for data lifecycle management or error recovery.

Append Variable Activity: Dynamic Arrays

Add elements to array variables during pipeline execution to accumulate values or lists that influence subsequent logic.

Set Variable Activity: State Management

Set or update values of variables during pipeline execution, foundational for dynamic control flow.
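The difference between the two variable activities is easiest to see side by side. The sketch below builds both definitions as Python dicts. The `SetVariable` and `AppendVariable` type names and the `variableName`/`value` properties follow the Azure Data Factory JSON shape and are assumed to carry over to Fabric. The variable and activity names are hypothetical.

```python
import json


def set_variable(name, variable, value):
    """Set Variable: overwrite the variable with a new value."""
    return {
        "name": name,
        "type": "SetVariable",
        "typeProperties": {"variableName": variable, "value": value},
    }


def append_variable(name, variable, value):
    """Append Variable: add one element to an existing array variable."""
    return {
        "name": name,
        "type": "AppendVariable",
        "typeProperties": {"variableName": variable, "value": value},
    }


# Hypothetical pipeline-level variable declarations.
variables = {
    "runStatus": {"type": "String", "defaultValue": "pending"},
    "processedFiles": {"type": "Array", "defaultValue": []},
}

steps = [
    set_variable("MarkStarted", "runStatus", "started"),
    append_variable(
        "RememberFile",
        "processedFiles",
        {"value": "@item().name", "type": "Expression"},
    ),
]
print(json.dumps({"variables": variables, "activities": steps}, indent=2))
```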

If Condition Activity: Branching Logic

Conditionally execute activities based on a boolean expression: one set of activities runs when it evaluates to true and, optionally, another when it evaluates to false.
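A minimal sketch of how such a branch might be described, again as a Python dict. The `expression`, `ifTrueActivities`, and `ifFalseActivities` property names are taken from the Azure Data Factory JSON shape and assumed to apply to Fabric. The `CountRows` lookup and the inner activities are hypothetical.

```python
import json


def if_condition(name, expression, if_true, if_false=None):
    """Return an If Condition activity definition as a plain dict."""
    return {
        "name": name,
        "type": "IfCondition",
        "typeProperties": {
            "expression": {"value": expression, "type": "Expression"},
            "ifTrueActivities": if_true,
            "ifFalseActivities": if_false or [],
        },
    }


# Hypothetical inner activity: pause briefly when no rows were found.
no_rows = {"name": "WaitForData", "type": "Wait", "typeProperties": {"waitTimeInSeconds": 60}}

branch = if_condition(
    "HasNewRows",
    # 'CountRows' is a hypothetical Lookup activity returning a row_count column.
    "@greater(activity('CountRows').output.firstRow.row_count, 0)",
    if_true=[],          # the load activities would go here
    if_false=[no_rows],
)
print(json.dumps(branch, indent=2))
```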

Switch Activity: Multi-way Branching

Execute different branches of activities depending on evaluated expressions with multiple possible outcomes.
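For comparison with a two-way branch, the sketch below describes a Switch with several cases and a default. The `on`, `cases`, and `defaultActivities` property names follow the Azure Data Factory JSON shape and are assumed to match Fabric. The `environment` parameter and the variable names are hypothetical.

```python
import json


def switch(name, on_expression, cases, default=None):
    """Return a Switch activity definition; cases maps a value to its activities."""
    return {
        "name": name,
        "type": "Switch",
        "typeProperties": {
            "on": {"value": on_expression, "type": "Expression"},
            "cases": [
                {"value": value, "activities": activities}
                for value, activities in cases.items()
            ],
            # Runs when the expression matches none of the case values.
            "defaultActivities": default or [],
        },
    }


def mark(target):
    """Hypothetical inner activity that records which branch ran."""
    return {
        "name": f"Mark_{target}",
        "type": "SetVariable",
        "typeProperties": {"variableName": "target", "value": target},
    }


route = switch(
    "RouteByEnvironment",
    "@pipeline().parameters.environment",
    cases={"dev": [mark("dev")], "prod": [mark("prod")]},
    default=[mark("unknown")],
)
print(json.dumps(route, indent=2))
```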

Security and Monitoring

Fabric Data Pipelines use Microsoft Entra ID (formerly Azure Active Directory) for authentication, role-based access control for pipeline security, and Key Vault integration for managing secrets. Monitoring is available through pipeline execution logs, alerts, and integration with Azure Monitor for telemetry.

Best Practices for Pipeline Development

- Use modular pipeline design with Execute Pipeline for reusability.

- Parameterize pipelines to handle different environments and dynamic conditions.

- Utilize incremental copy and watermarking to optimize cost and performance.

- Implement robust error handling and logging for troubleshooting.

- Monitor and tune pipeline concurrency and resource utilization using the Microsoft Fabric Capacity Metrics app.

Further Learning and Official Documentation

Related Tutorials on Microsoft Fabric

- Dataflow Gen2 in Fabric – Microsoft Fabric Tutorial Series: An in-depth tutorial series on Dataflow Gen2 covering connectors, transformations, and best practices.

- Fabric Lakehouse Tutorial – Microsoft Fabric Tutorial Series: A comprehensive tutorial covering Fabric Lakehouse design, data governance, and optimization strategies.

These tutorials complement the Fabric Data Pipeline concepts and help deepen your understanding of Microsoft Fabric’s ecosystem.